💊 Pill of the week

In machine learning, dealing with categorical data is a bit like organizing a wild variety of snacks. You need to categorize them without implying a false relationship between different items. Imagine you have chips, candy, and fruit, but labeling them with numbers like 1, 2, and 3 might trick your model into thinking candy is somehow “greater” than chips. That’s where One-Hot Encoding (OHE) comes in handy—it’s a way to convert these categories into a format that your machine learning model can digest without making assumptions about the order of items.


What is One-Hot Encoding?

One-Hot Encoding is a technique used to convert categorical data into binary columns. Each category gets its own column, and for any given row, the column corresponding to that category is set to 1, while the others are set to 0. This avoids any false notion of ranking or ordinality between categories.

Let’s imagine we have a “Color” column with values like Red, Green, and Blue. If we just assigned numbers (say, 1 for Red, 2 for Green, and 3 for Blue), we’d unintentionally suggest that Blue is somehow greater than Green, and Green greater than Red. Not good, right?

Instead, One-Hot Encoding creates separate columns for each color:

Red → [1, 0, 0]

Green → [0, 1, 0]

Blue → [0, 0, 1]

Now, our model treats these as independent categories without making assumptions about their relationship.
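To make the mapping above concrete, here is a minimal from-scratch sketch in plain Python. The helper name one_hot and the fixed category order are assumptions made for illustration; in practice you would normally rely on a library.

```python
# Fixed category order, matching the Red / Green / Blue listing above.
CATEGORIES = ["Red", "Green", "Blue"]


def one_hot(value, categories=CATEGORIES):
    """Return a list with a single 1 at the position of `value`."""
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1 if value == category else 0 for category in categories]


# Encode every category and print the resulting vectors.
encoded = {color: one_hot(color) for color in CATEGORIES}
for color, vector in encoded.items():
    print(color, "->", vector)
```

Each vector has exactly one 1, which is where the name "one-hot" comes from.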


There are more encoders, each of them suitable for a different type of data. You can check them here, a previous DIY issue in which we share both theory and code!

Why Use One-Hot Encoding?

Many machine learning algorithms, like linear regression or neural networks, can’t work with categorical data directly. They require numerical inputs to perform their calculations. If we use label encoding, assigning numerical values to categories, our model may interpret these values as having some rank or order, which isn’t true for many categories (like colors). One-Hot Encoding avoids this issue by making each category its own feature, ensuring the model doesn’t make any wrong assumptions about relationships between categories.
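One way to see the "false order" problem is to compare distances between categories under each encoding. The sketch below is only an illustration, assuming the 1/2/3 label codes used earlier and NumPy for the arithmetic; the variable names are hypothetical.

```python
import numpy as np

colors = ["Red", "Green", "Blue"]

# Label encoding: one number per category (1, 2, 3).
label_codes = {c: np.array([float(i + 1)]) for i, c in enumerate(colors)}

# One-hot encoding: one row of the identity matrix per category.
one_hot_codes = {c: row for c, row in zip(colors, np.eye(len(colors)))}


def distance(codes, a, b):
    """Euclidean distance between the encodings of categories a and b."""
    return float(np.linalg.norm(codes[a] - codes[b]))


pairs = [("Red", "Green"), ("Green", "Blue"), ("Red", "Blue")]
label_distances = {pair: distance(label_codes, *pair) for pair in pairs}
one_hot_distances = {pair: distance(one_hot_codes, *pair) for pair in pairs}

print("label encoding:", label_distances)
print("one-hot encoding:", one_hot_distances)
```

With label codes, Red ends up twice as far from Blue as from Green, a relationship that does not exist in the data. With one-hot vectors, every pair of categories is equally far apart.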

Here is a bit of theoretical explanation mixed with code, but if you want to see all the code, scroll down to the end of the newsletter!

How to Implement One-Hot Encoding in Python

Now that we know what One-Hot Encoding is and why it’s important, let’s dive into how to actually implement it using Python. There are two popular libraries for this: pandas and scikit-learn.

1. Using Pandas’ get_dummies() Function

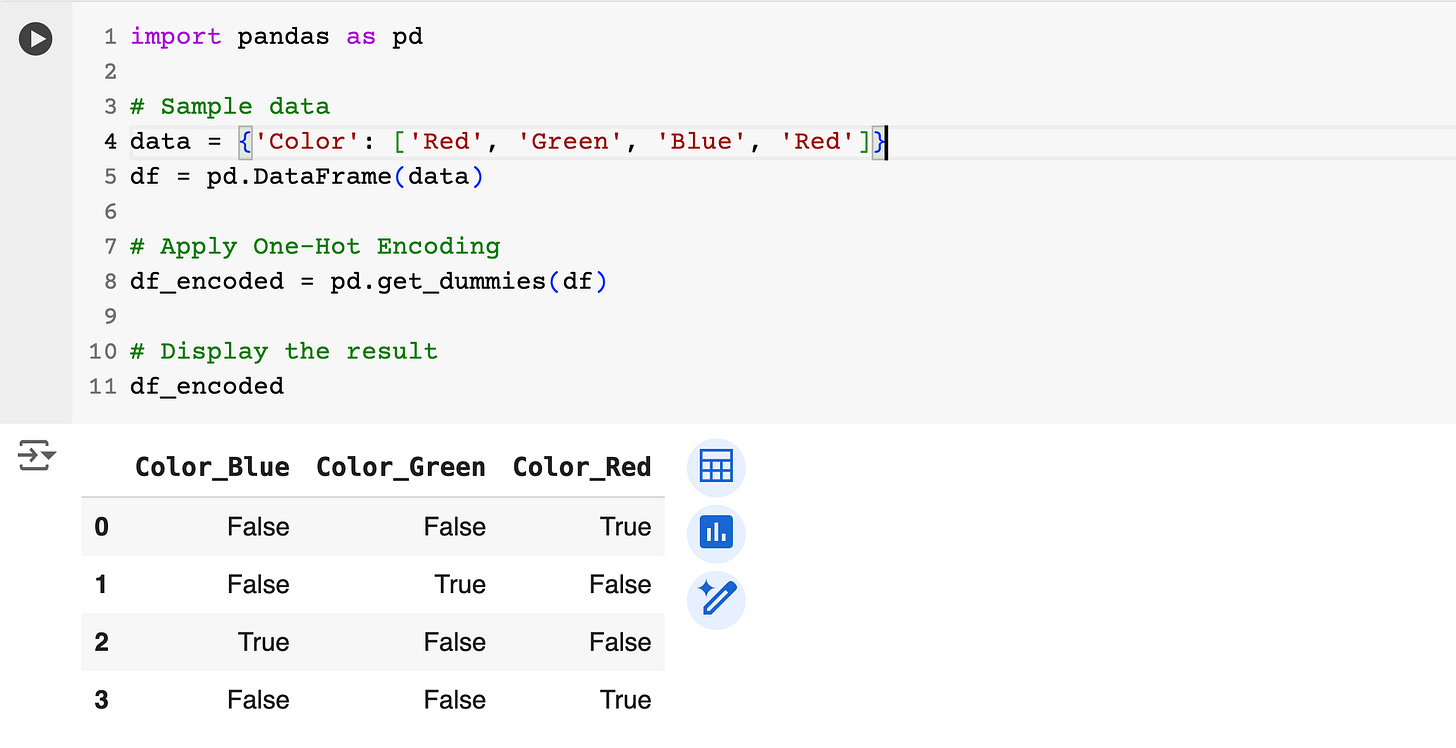

Pandas makes it really easy to apply One-Hot Encoding with the get_dummies() function.

Here’s how it works:

In this example, the get_dummies() function automatically converts the categorical "Color" column into three new binary columns, one for each unique category (Blue, Green, and Red). In recent pandas versions the values in these columns are booleans: True where the row has that color and False otherwise. If you prefer 0s and 1s, pass dtype=int to get_dummies() or call .astype(int) on the result.
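The original snippet is not reproduced here, so the following is a minimal sketch of what such an example could look like. The DataFrame contents are made up for illustration.

```python
import pandas as pd

# Hypothetical toy data with a single categorical column.
df = pd.DataFrame({"Color": ["Red", "Green", "Blue", "Green"]})

# One new column per unique category, named <column>_<category>.
dummies = pd.get_dummies(df, columns=["Color"])
print(dummies)

# Same encoding, but with integer 0/1 values instead of booleans.
dummies_int = pd.get_dummies(df, columns=["Color"], dtype=int)
print(dummies_int)
```

The new columns are sorted alphabetically by category, so they come out as Color_Blue, Color_Green, and Color_Red.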

2. Using Scikit-Learn’s OneHotEncoder

For more control, especially when integrating with machine learning pipelines, scikit-learn offers the OneHotEncoder class, which is highly flexible.

Here’s how you can use it:

In this example, OneHotEncoder converts the categorical columns (Product and Size) into binary columns, one per unique category. The encoded columns are then combined with the original numerical column (Price) into a final DataFrame, so both categorical and numerical features are in a format a model can accept.

pd.get_dummies VS sklearn.OneHotEncoder

pd.get_dummies(): Best for quick, simple one-hot encoding in pandas.

Ideal for small datasets or exploratory data analysis.

Automatically handles categorical columns in DataFrames.

Not suitable for advanced machine learning pipelines or handling unknown categories.

sklearn.OneHotEncoder: Ideal for larger datasets and machine learning pipelines.

Provides more control (e.g., handling unknown categories, sparse output).

Can be integrated with scikit-learn’s pipelines and models.

Suitable for more complex workflows and production environments.

Common Pitfalls and How to Avoid Them

Increased Dimensionality: One-hot encoding can significantly increase the number of columns when dealing with features that have many categories (like countries or product IDs). This creates a large, sparse dataset, which can slow down model training and lead to overfitting, where the model becomes too tailored to the training data and performs poorly on new data.

If you have a feature with too many categories, you can try dimensionality reduction methods like Principal Component Analysis (PCA) or use feature hashing to reduce the number of columns. These techniques help manage the size of your dataset without losing too much information.

Alternatively, you could keep only the most common categories and group the rest into a single catch-all category. Whether this is appropriate depends on your particular use case and data.
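Keeping only the most common categories can be done with a few lines of pandas. This is one possible sketch, assuming a made-up Country column, a cutoff of the top 2 categories, and an "Other" bucket for everything else; all of these are illustrative choices.

```python
import pandas as pd

# Hypothetical high-cardinality feature (5 distinct values here).
countries = pd.Series(
    ["US", "US", "US", "FR", "FR", "DE", "JP", "BR"], name="Country"
)

# Find the most frequent categories.
top_n = 2
top_categories = countries.value_counts().nlargest(top_n).index

# Keep the frequent categories and replace the rest with "Other".
reduced = countries.where(countries.isin(top_categories), "Other")

# One-hot encode the reduced feature: 3 columns instead of 5.
dummies = pd.get_dummies(reduced, prefix="Country", dtype=int)
print(dummies)
```

The right cutoff is a judgment call: a lower top_n gives fewer columns but hides more detail inside the "Other" bucket.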

Dummy Variable Trap: One-hot encoding creates a separate column for each category, which introduces redundancy. For example, with three categories (Red, Green, and Blue), you don’t need all three columns: if both Red and Green are 0, the color must be Blue. Because the columns always sum to 1, any one of them can be computed from the others. This perfect multicollinearity can make coefficient estimates unstable in linear models, such as unregularized linear or logistic regression with an intercept.

To avoid this, drop one of the categories (for example, drop the "Blue" column). This ensures that the model doesn’t get redundant information. Both pd.get_dummies() and scikit-learn’s OneHotEncoder offer options to drop one category automatically (drop_first=True or drop='first', respectively).

By being mindful of these common pitfalls, you can make sure your one-hot encoded data is efficient and less likely to cause model performance issues.

Why Should You Care About One-Hot Encoding?

One-Hot Encoding isn’t just a minor technical detail—it’s critical for ensuring your machine learning model can interpret categorical features correctly. Without it, your model might make wrong assumptions, leading to:

Poor Predictions: Misinterpreted categorical features can mess up your model’s predictions. For instance, imagine a customer segmentation model where customers are labeled with numbers (e.g., 1 for "VIP", 2 for "Regular", and 3 for "New"). The model might mistakenly assume that "New" customers are more important than "VIP" customers simply because the number 3 is greater than 1, leading to incorrect predictions or prioritizations.

Model Confusion: Algorithms like neural networks or logistic regression cannot handle categorical data directly. Without converting categories to numerical representations, these algorithms will fail to train or make predictions.

By using One-Hot Encoding, you help your model understand the true nature of categorical data without imposing any artificial relationships between categories.

Remember, you have a notebook with all the code shared in this article at the end of the issue!

📖 Book of the week

This week, we feature “Building LLMs for Production: Enhancing LLM Abilities and Reliability with Prompting, Fine-Tuning, and RAG”, by Louis-François Bouchard and Louie Peters.

The book is aimed at developers, AI engineers, and tech enthusiasts looking to build and scale LLM applications. It is also perfect for AI practitioners eager to dive deep into practical techniques like RAG, fine-tuning, and optimizing LLM models for enhanced accuracy and scalability.

Master LLM Concepts and Frameworks: The book serves as an all-in-one resource, guiding you from LLM theory to practical application. You’ll explore frameworks like LangChain and LlamaIndex, learning how to build robust, scalable AI solutions. Each chapter covers essential topics like prompting, retrieval-augmented generation (RAG), fine-tuning, and deployment.

Hands-on Experience with Real-World Code Projects: With detailed examples and Colab notebooks, you can apply the concepts in real-time, working on code projects designed to solve real-world challenges.

Unlock New Applications of AI: The book covers everything from LLM architecture to advanced techniques like fine-tuning and RAG. You’ll learn how to apply LLMs across a variety of use cases, from chatbots and summarization to advanced question-answering systems.

Roadmap for Production-Ready AI: Beyond the basics, this guide equips developers with the skills to build and optimize applications for real-world use, focusing on the critical aspects of scalability, reliability, and performance.

⚡Power-Up Corner

While One-Hot Encoding seems simple, there are a few advanced techniques that can make it even more effective in your machine learning projects.
