Issue #53 - Support Vector Machines

💊 Pill of the week

Let’s take a break from Time Series and ARIMA… Today we will introduce Support Vector Machines! Support Vector Machines (SVMs) are a type of supervised learning algorithm that have garnered significant attention in the field of machine learning. These versatile models are designed to tackle both classification and regression problems, making them a valuable tool in the data scientist's arsenal.

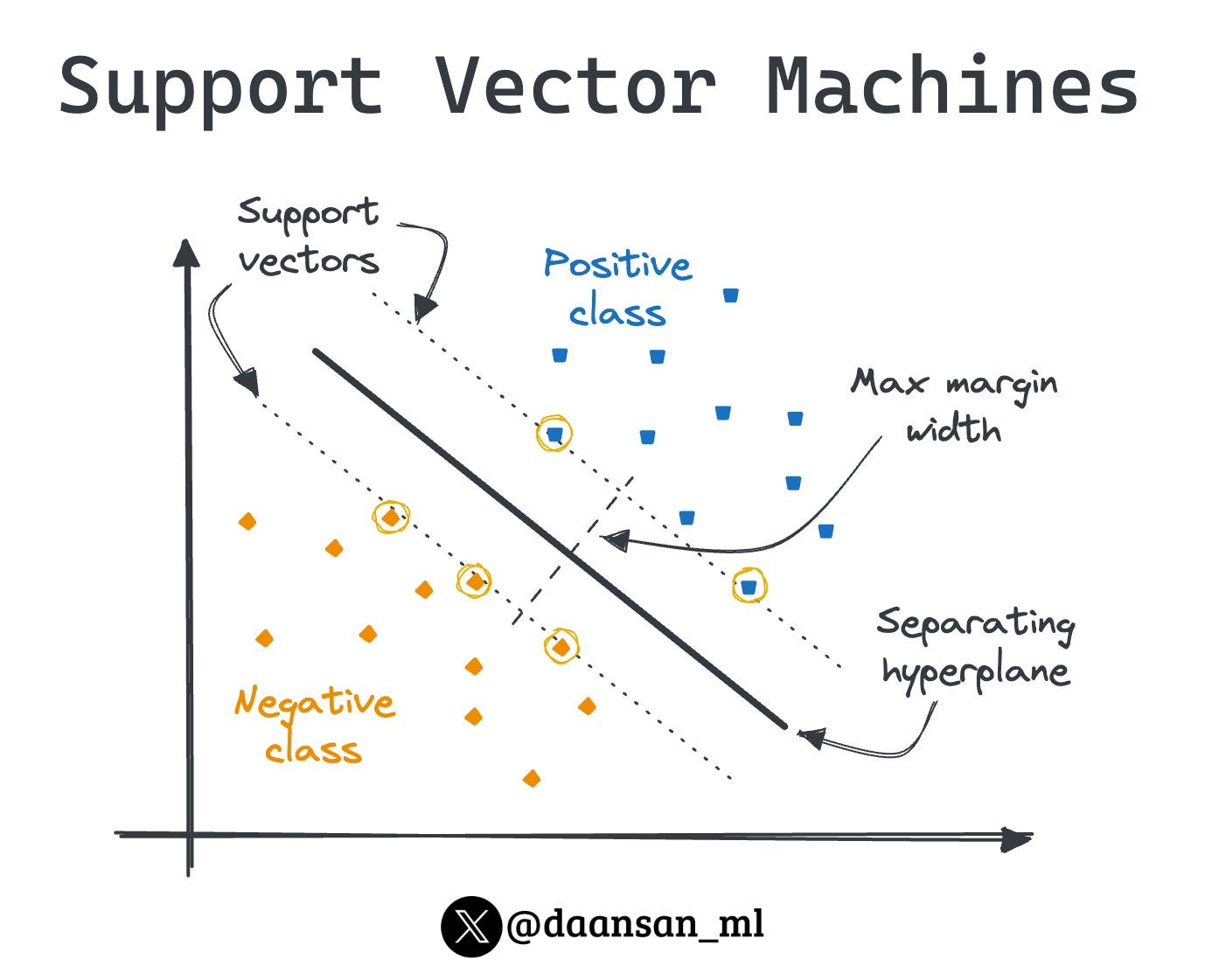

At the core of SVMs is the fundamental goal of finding the optimal boundary, known as the “separating hyperplane” or “maximum margin hyperplane”, that best separates different classes of data. This boundary is determined by the data points closest to it, aptly named “support vectors”. By transforming the data into a higher-dimensional space, SVMs can handle non-linear relationships with ease, a feat that sets them apart from traditional linear models.

How does it work?

The working mechanism of SVMs can be described as follows:

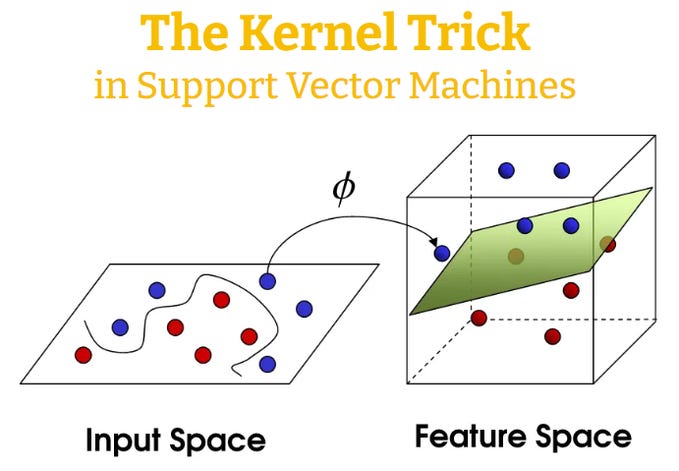

Data Transformation: SVMs begin by transforming the input data into a higher-dimensional feature space, where the data can be more easily separated by a linear hyperplane.

Kernel Trick: To efficiently perform this transformation, SVMs utilize the "kernel trick." Instead of explicitly transforming the data into the higher-dimensional space, the kernel function computes the inner product of the transformed data points, without the need to know the actual transformation. This allows SVMs to work with high-dimensional feature spaces without significantly increasing the computational complexity.

Boundary Optimization: The algorithm then seeks to find the optimal hyperplane that maximizes the margin between the different classes of data. This hyperplane is known as the "maximum margin hyperplane," and the data points closest to it are identified as the "support vectors."

Classification: Once the optimal hyperplane is determined, new data points can be classified by simply evaluating which side of the hyperplane they fall on.

The ability to handle non-linear relationships through the kernel trick is a key advantage of SVMs, as it allows them to capture complex underlying patterns in the data without explicitly transforming the input features.

You can read more about the “kernel trick” in this Twitter thread:

When to Use it?

SVMs are particularly well-suited for the following scenarios:

High-Dimensional Data: SVMs excel in high-dimensional data environments, where they can effectively navigate a large number of features even with relatively small datasets.

Non-Linear Relationships: When the relationship between the input features and the target variable is non-linear, SVMs can effectively capture these complexities through the use of kernel functions.

Small to Medium-Sized Datasets: While SVMs can handle large datasets, they are especially useful when the available data is limited, as they can still perform well and avoid overfitting.

Pros and Cons

Pros:

Effective in high-dimensional spaces and can handle a large number of features.

Capable of capturing non-linear relationships through the use of kernel functions.

Perform well with small to medium-sized datasets, avoiding overfitting.

Produce a clear decision boundary that can be easily interpreted.

Cons:

The choice of kernel function and regularization parameter can significantly impact the model's performance, requiring careful tuning.

Computationally expensive for large datasets, as the training process involves solving a quadratic optimization problem.

Less intuitive and more complex to understand compared to some other machine learning algorithms.

Python Implementation

Here's a basic example of using SVMs for classification using the scikit-learn library:

from sklearn.svm import SVC

# Create the SVM classifier

model = SVC()

# Train the model

model.fit(X, y)

# Predict using the trained model

predictions = model.predict(X)In this example, we use the SVC class from the scikit-learn library to create the SVM classifier. We then train the model on our labelled data and make predictions using the trained model.

If you want to use SVMs for regression tasks instead of classification, you need to use the SVR (Support Vector Regressor) class from scikit-learn. Here is an example:

from sklearn.svm import SVR

# Create the SVR model

model = SVR(kernel='rbf', C=1, epsilon=0.1)

# Train the model

model.fit(X_train, y_train)

# Make predictions on the test set

y_pred = model.predict(X_test)In this example, we use the SVR class from the scikit-learn library to create the support vector regression model. We then train the model on the generated regression dataset and make predictions on the test set, evaluating the performance using the Mean Squared Error (MSE) metric.

The key differences between the classification and regression examples are:

For regression, we use the

SVRclass instead ofSVC.The target variable

yis a continuous value instead of a categorical label.

Interpreting the Results

The key outputs from an SVM model that can be interpreted are:

Support Vectors: The data points closest to the maximum margin hyperplane, which are the most influential in determining the decision boundary.

Hyperplane Coefficients: The coefficients that define the maximum margin hyperplane, which can provide insights into the relative importance of different features.

Margin: The distance between the maximum margin hyperplane and the nearest data points, which represents the confidence in the model's predictions.

You can extract them from your trained model like this:

# Support vectors

support_vectors = model.support_vectors_

# Hyperplane coefficients

hyperplane_coef = model.coef_

# Margin

margin = model.score(X, y)By analyzing these outputs, we can gain valuable insights into the underlying relationships in the data and the decision-making process of the SVM model.

In conclusion, Support Vector Machines are a powerful and versatile class of machine learning algorithms that have proven to be effective in a wide range of applications. Their ability to handle non-linear relationships, high-dimensional data, and small to medium-sized datasets make them a valuable addition to the data scientist's toolkit.

🎓Learn Real-World Machine Learning!*

Do you want to learn Real-World Machine Learning?

Data Science doesn’t finish with the model training… There is much more!

Here you will learn how to deploy and maintain your models, so they can be used in a Real-World environment:

Elevate your ML skills with "Real-World ML Tutorial & Community"! 🚀

Business to ML: Turn real business challenges into ML solutions.

Data Mastery: Craft perfect ML-ready data with Python.

Train Like a Pro: Boost your models for peak performance.

Deploy with Confidence: Master MLOps for real-world impact.

🎁 Special Offer: Use "MASSIVE50" for 50% off.

*Sponsored

🤖 Tech Round-Up

No time to check the news this week?

This week's TechRoundUp comes full of AI news. From Anthropic's Ethical Dilemma to the AI chip innovation race, the future is zooming towards us!

Let's dive into the latest Tech highlights you probably shouldn’t this week 💥

1️⃣ Unlocking AI's Ethical Dilemma

Anthropic researchers have unveiled a new "many-shot jailbreaking" technique, potentially allowing LLMs to bypass ethical guidelines with repeated, less harmful questions.

2️⃣ Meta's Bold Move for Digital Integrity

Meta announces an expansion in labeling AI-generated imagery across Facebook, Instagram, and Threads. This move aims to enhance transparency and combat misinformation, especially in an election-packed year.

EU and US are joining forces in the AI arena, promising increased cooperation on AI safety, standards, and R&D. This collaboration highlights the global emphasis on regulating and harnessing AI's potential responsibly.

OpenAI is broadening its custom model training program, reflecting the growing demand for personalized AI solutions. This expansion signifies OpenAI's commitment to making AI more accessible and tailored to specific needs.

5️⃣ Defying the Odds in the AI Chip Race

Amidst a challenging funding climate for AI chip startups, Hailo stands out, pushing forward with innovations that defy the odds.

This resilience is a beacon for startups navigating the competitive AI landscape.