💊 Pill of the week

In the dynamic field of conversational AI, managing memory is a cornerstone for enabling natural, coherent, and contextually relevant interactions. Memory, in this context, refers to the system's ability to remember and utilize information from past exchanges to inform and enhance future interactions.

Effective memory management allows conversational agents to:

maintain context

understand user intents more accurately

deliver personalized responses

These capabilities are crucial for a seamless user experience.

Memory in conversational AI can be compared to human memory in conversation. Just as people remember previous parts of a conversation to make their responses relevant and coherent, AI systems must retain and reference past interactions to maintain the flow and context of the dialogue.

Without memory, interactions would be disjointed and repetitive, leading to user frustration and decreased efficacy of the AI system.

Let's explore different approaches to handling conversation history and context in AI, examining their advantages, potential applications, and implementation details:

Entire Conversation History Memory

Sliding Window Memory

Token-Limited Memory

Summarized Memory

In this issue, we will focus on the first two:

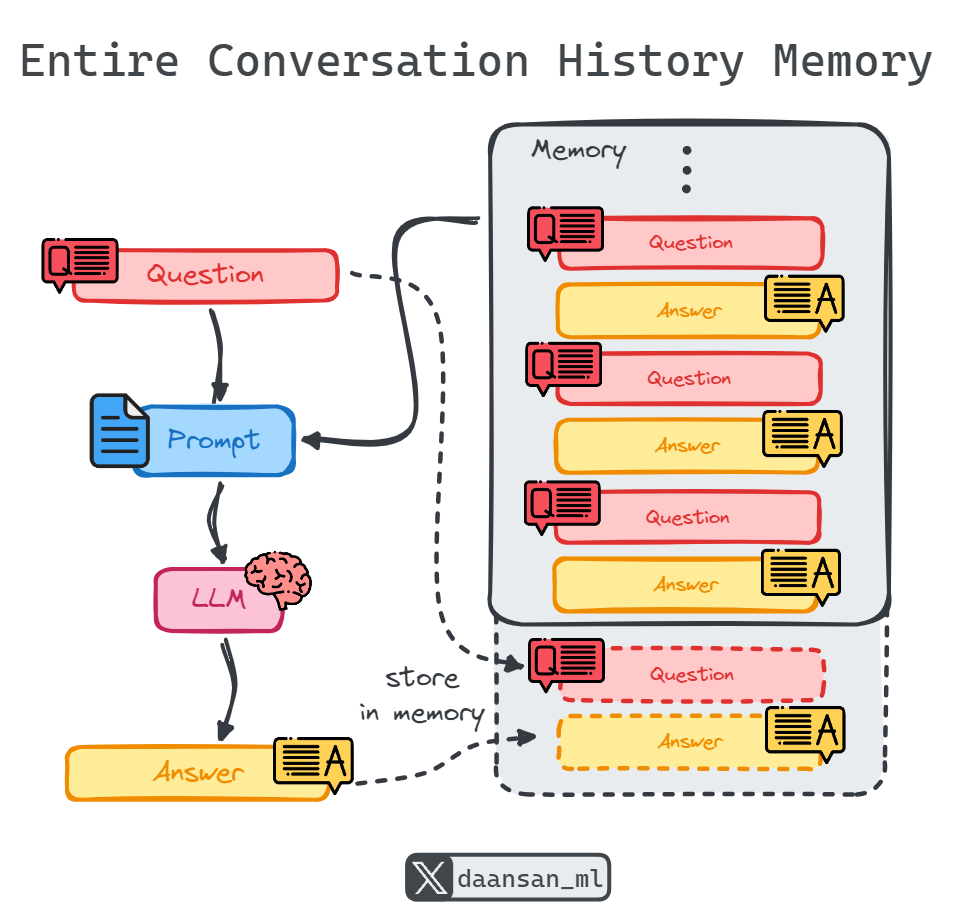

Entire Conversation History Memory

The most straightforward method involves storing the entire conversation history, remembering all previous inputs and outputs. This comprehensive approach ensures the AI has access to the full context of the interaction, which is crucial for providing informed and accurate responses.
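A minimal, framework-free sketch of the idea follows. It assumes a simple chat loop in which past turns are rendered into the prompt as plain "Human:"/"AI:" lines. The class and method names (`FullHistoryMemory`, `save_turn`, `as_prompt`) are hypothetical and chosen for illustration; they are not LangChain's API.

```python
from dataclasses import dataclass, field


@dataclass
class FullHistoryMemory:
    """Hypothetical full-history memory: every turn is kept, nothing is dropped."""

    turns: list = field(default_factory=list)

    def save_turn(self, user_input: str, ai_output: str) -> None:
        # Append the exchange; the list grows without bound.
        self.turns.append((user_input, ai_output))

    def as_prompt(self, new_input: str) -> str:
        # Render the complete history, then the new message, as prompt text.
        lines = []
        for user_input, ai_output in self.turns:
            lines.append(f"Human: {user_input}")
            lines.append(f"AI: {ai_output}")
        lines.append(f"Human: {new_input}")
        lines.append("AI:")
        return "\n".join(lines)


memory = FullHistoryMemory()
memory.save_turn("My order number is 1234.", "Thanks, I found order 1234.")
memory.save_turn("It arrived damaged.", "Sorry to hear that. I can arrange a replacement.")
print(memory.as_prompt("Can you ship it to the same address?"))
```

Because every turn is kept, the prompt grows with each exchange, so long conversations eventually run into the model's context limit and cost more per call.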

Advantages:

Complete Context Preservation: By retaining the entire conversation, the AI can generate responses that are coherent and relevant, as it has all the necessary background information.

Detailed Interaction Handling: Essential for applications where understanding the full context is critical, such as in-depth customer support or complex problem-solving scenarios.

Example Use Case:

A customer support chatbot that needs to refer to the entire conversation history to provide accurate and contextualized responses, ensuring the user doesn't have to repeat information.

Implementation in LangChain:

```python
from langchain.memory import ConversationBufferMemory

# Stores every prior input and output verbatim in a single buffer
memory = ConversationBufferMemory()
```

Sliding Window Memory

This approach stores only a fixed number of the most recent exchanges, maintaining a sliding window over the latest inputs and outputs. This lets the AI focus on the most recent context while still considering some conversational history.
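The sliding window can be sketched with a bounded `collections.deque`, assuming a window of `k` exchanges in which the oldest exchange is evicted once the window is full. `SlidingWindowMemory`, its methods, and `k=2` are hypothetical choices for illustration; LangChain's `ConversationBufferWindowMemory`, with its `k` parameter, follows the same idea.

```python
from collections import deque


class SlidingWindowMemory:
    """Hypothetical sliding-window memory that keeps only the last k exchanges."""

    def __init__(self, k: int) -> None:
        # A deque with maxlen drops the oldest item when a new one is appended at capacity.
        self.turns = deque(maxlen=k)

    def save_turn(self, user_input: str, ai_output: str) -> None:
        self.turns.append((user_input, ai_output))

    def as_prompt(self, new_input: str) -> str:
        # Only the exchanges still inside the window reach the prompt.
        lines = []
        for user_input, ai_output in self.turns:
            lines.append(f"Human: {user_input}")
            lines.append(f"AI: {ai_output}")
        lines.append(f"Human: {new_input}")
        lines.append("AI:")
        return "\n".join(lines)


memory = SlidingWindowMemory(k=2)
memory.save_turn("My order number is 1234.", "Thanks, I found order 1234.")
memory.save_turn("It arrived damaged.", "Sorry to hear that.")
memory.save_turn("Can I get a replacement?", "Yes, I can arrange one.")
print(memory.as_prompt("When will it arrive?"))  # the order-number exchange has been evicted
```

The trade-off is that anything outside the window, such as the order number here, is forgotten, so `k` has to be tuned to how far back the conversation typically needs to reach.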


Subscribe to Machine Learning Pills to keep reading this post and get 7 days of free access to the full post archives.