💊 Pill of the Week

In the world of Natural Language Processing (NLP) and particularly in Retrieval-Augmented Generation (RAG) systems, the ability to effectively handle large documents is crucial. This is where text chunking comes into play. Today we will explore the concept of text chunking, its importance in RAG systems, various methods of implementation, and considerations for optimal use.

What is Text Chunking?

Text chunking, also known as text splitting, is the process of breaking down large documents or texts into smaller, more manageable pieces called "chunks." These chunks are typically designed to be self-contained units of information that can be processed and retrieved independently.

The Importance of Chunking in RAG

In RAG systems, chunking plays a vital role in several stages of the process:

Document Ingestion and Preprocessing: Chunking is a key step in preparing documents for use in a RAG system.

Indexing: Each chunk is indexed, usually by converting it into a vector representation.

Retrieval: The system searches for relevant chunks based on a query, allowing for more precise retrieval.

Context Formation: Relevant chunks are used to form the context for the language model.

Generation: The language model uses the retrieved chunks as additional context for generating a response.
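To make these stages concrete, here is a minimal, dependency-free sketch of the flow from ingestion to context formation. It is only an illustration: the word-count "embedding", the `embed`, `cosine`, and `retrieve` helpers, and the sample document are all assumptions made for this example, standing in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Indexing: a toy 'vector' of lowercase word counts (stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Retrieval: return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


document = (
    "Chunking splits documents into small pieces. "
    "Each piece is embedded and stored in an index. "
    "At query time the most similar pieces are retrieved. "
    "The language model answers using the retrieved pieces."
)

# Ingestion and preprocessing: one chunk per sentence (a deliberately simple rule)
chunks = [s for s in re.split(r"(?<=[.!?])\s+", document) if s]

# Indexing: store each chunk next to its vector
index = [(c, embed(c)) for c in chunks]

# Retrieval and context formation
question = "How are documents split into pieces?"
top = retrieve(question, index, k=2)
prompt = "Context:\n" + "\n".join(top) + f"\n\nQuestion: {question}"
print(prompt)  # Generation would pass this prompt to a language model
```

Because only the retrieved chunks reach the prompt, the way the document was chunked directly limits what the model can see.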


How Do Text Splitters Work?

Text splitters in RAG systems typically follow this process:

Split the text into small, semantically meaningful pieces (often sentences).

Combine these small pieces into larger chunks until reaching a certain size.

Once the size limit is reached, create a new chunk, often with some overlap to maintain context.

Text splitters can be customized along two axes:

How the text is split

How the chunk size is measured
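The three steps and the two customization axes above can be sketched in plain Python. This is a simplified illustration, not LangChain's implementation: `split_sentences`, `merge_pieces`, and the `overlap_pieces` parameter are hypothetical names chosen for this example.

```python
import re
from typing import Callable


def split_sentences(text: str) -> list[str]:
    """Step 1: split the text into small pieces (here: sentences)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def merge_pieces(
    pieces: list[str],
    chunk_size: int,
    overlap_pieces: int = 1,
    length_function: Callable[[str], int] = len,
) -> list[str]:
    """Steps 2 and 3: combine pieces until the size limit, then start a new chunk with overlap."""
    chunks: list[str] = []
    current: list[str] = []
    for piece in pieces:
        if current and length_function(" ".join(current + [piece])) > chunk_size:
            chunks.append(" ".join(current))
            # Carry the tail of the finished chunk into the next one as overlap
            current = current[-overlap_pieces:] if overlap_pieces > 0 else []
            # Drop the overlap if it would not fit next to the new piece
            if current and length_function(" ".join(current + [piece])) > chunk_size:
                current = []
        current.append(piece)
    if current:
        chunks.append(" ".join(current))
    return chunks


text = "Cats sleep a lot. Dogs like walks. Birds can fly. Fish live in water."
sentences = split_sentences(text)

# Axis 2, option A: measure chunk size in characters
char_chunks = merge_pieces(sentences, chunk_size=40, length_function=len)

# Axis 2, option B: measure chunk size in whitespace-separated words
word_chunks = merge_pieces(sentences, chunk_size=6, length_function=lambda s: len(s.split()))

print(char_chunks)
print(word_chunks)
```

Swapping `split_sentences` for another splitting rule changes the first axis (how the text is split), while swapping `length_function` changes the second (how size is measured). Note that a single piece longer than `chunk_size` still becomes its own oversized chunk in this sketch.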

Types of Text Splitters in LangChain

LangChain offers various text splitters, each with its own strengths and use cases:

Recursive Text Splitter: Recursively splits text into chunks, aiming to keep related pieces together without adding metadata.

HTML Text Splitter: Splits text based on HTML structure, adding metadata about the origins of each chunk.

Markdown Text Splitter: Divides text based on Markdown headers, preserving structure and adding header-level metadata.

Code Text Splitter: Splits text based on the syntax of various programming languages, useful for processing code.

Token Text Splitter: Splits text based on token count, offering flexibility with various methods to measure tokens.

Character Text Splitter: Splits text based on a specific user-defined character, such as a newline or space.

Semantic Chunker: Splits text into sentences and then combines them based on semantic similarity using embeddings.

AI21 Semantic Text Splitter: Identifies and splits text into coherent pieces based on distinct topics, adding relevant metadata.

🚨 In the free version of this issue we will share only 5 of them; for the remaining ones, please consider becoming a paid subscriber:

For the full issue you can check here:

Recursive Text Splitter

Classes: RecursiveCharacterTextSplitter, RecursiveJsonSplitter

Splits on: User-defined characters

Adds metadata: No

Description: Recursively splits text, trying to keep related pieces together. Recommended for general use.

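from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=800,       # measured in characters, because length_function=len
    chunk_overlap=100,    # characters shared between consecutive chunks
    length_function=len,
    is_separator_regex=False,
)

# docs is expected to be a list of strings
chunks = text_splitter.create_documents(docs)

HTML Text Splitter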

Classes: HTMLHeaderTextSplitter, HTMLSectionSplitter

Splits on: HTML-specific characters

Adds metadata: Yes

Description: Splits text based on HTML structure, adding relevant information about chunk origins.

from langchain_text_splitters import HTMLHeaderTextSplitter

headers_to_split_on = [
    ("h1", "Header 1"),
    ("h2", "Header 2"),
    ("h3", "Header 3"),
]

html_splitter = HTMLHeaderTextSplitter(
    headers_to_split_on=headers_to_split_on
)

html_header_splits = html_splitter.split_text(html_string)

This can then be pipelined to another splitter (like the recursive one).
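To show what such a two-stage pipeline does conceptually, here is a standard-library sketch: stage one groups text under its headers and records them as metadata, and stage two cuts long sections by size while keeping that metadata. It is not LangChain's implementation; `HeaderSectionParser` and `split_by_size` are hypothetical helpers written for this illustration, and the sample HTML is made up.

```python
from html.parser import HTMLParser


class HeaderSectionParser(HTMLParser):
    """Stage 1: collect text under h1/h2/h3 headers, recording active headers as metadata."""

    HEADER_NAMES = {"h1": "Header 1", "h2": "Header 2", "h3": "Header 3"}

    def __init__(self):
        super().__init__()
        self.sections = []      # list of {"content": str, "metadata": dict}
        self._headers = {}      # headers currently in effect
        self._in_header = None  # tag name while inside a header element
        self._buffer = []       # text collected for the current section

    def _flush(self):
        text = " ".join(" ".join(self._buffer).split())
        if text:
            self.sections.append({"content": text, "metadata": dict(self._headers)})
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADER_NAMES:
            self._flush()
            self._in_header = tag
            # A new header clears its own level and every deeper level
            for name_tag, name in self.HEADER_NAMES.items():
                if name_tag >= tag:
                    self._headers.pop(name, None)

    def handle_endtag(self, tag):
        if tag == self._in_header:
            self._in_header = None

    def handle_data(self, data):
        if self._in_header:
            # Assumes the header contains plain text only
            self._headers[self.HEADER_NAMES[self._in_header]] = data.strip()
        else:
            self._buffer.append(data)

    def close(self):
        super().close()
        self._flush()


def split_by_size(section, chunk_size):
    """Stage 2: cut a section into chunks of at most chunk_size characters, keeping metadata."""
    chunks, current = [], []
    for word in section["content"].split():
        if current and len(" ".join(current + [word])) > chunk_size:
            chunks.append({"content": " ".join(current), "metadata": section["metadata"]})
            current = []
        current.append(word)
    if current:
        chunks.append({"content": " ".join(current), "metadata": section["metadata"]})
    return chunks


html_string = """
<h1>Guide</h1>
<p>Welcome to the guide.</p>
<h2>Setup</h2>
<p>Install the package. Then configure it carefully before use.</p>
"""

parser = HeaderSectionParser()
parser.feed(html_string)
parser.close()

final_chunks = [c for s in parser.sections for c in split_by_size(s, chunk_size=30)]
for c in final_chunks:
    print(c["metadata"], "->", c["content"])
```

Every size-based chunk inherits the header metadata of its section, which is the main reason to run the structural splitter first.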

Markdown Text Splitter

Class: MarkdownHeaderTextSplitter

Splits on: Markdown-specific characters

Adds metadata: Yes

Description: Splits text based on Markdown structure, adding information about chunk origins.

Its implementation is similar to the previous one:

from langchain_text_splitters import MarkdownHeaderTextSplitter

headers_to_split_on = [
    ("#", "Header 1"),
    ("##", "Header 2"),
    ("###", "Header 3"),
]

markdown_splitter = MarkdownHeaderTextSplitter(
    headers_to_split_on=headers_to_split_on
)

md_header_splits = markdown_splitter.split_text(markdown_document)

Code Text Splitter

Only for paying subscribers


Token Text Splitter

Multiple classes available

Splits on: Tokens

Adds metadata: No

Description: Splits text based on token count, with various methods to measure tokens.

Here we will use the tiktoken-based splitter; however, there are many other methods that you can use.

from langchain_text_splitters import TokenTextSplitter

text_splitter = TokenTextSplitter(chunk_size=10, chunk_overlap=0)

# split_text expects a single string and returns a list of strings
chunks = text_splitter.split_text(text)

Character Text Splitter

Class: CharacterTextSplitter

Splits on: User-defined character

Adds metadata: No

Description: A simple method that splits text based on a specific character.

from langchain_text_splitters import CharacterTextSplitter

text_splitter = CharacterTextSplitter(
    separator="\n\n",     # split on blank lines (paragraph boundaries)
    chunk_size=1000,
    chunk_overlap=200,
    length_function=len,
    is_separator_regex=False,
)

# docs is expected to be a list of strings
chunks = text_splitter.create_documents(docs)

Semantic Chunker

Only for paying subscribers


AI21 Semantic Text Splitter

Only for paying subscribers

Choosing the Right Text Splitter

When selecting a text splitter for your RAG system, consider the following factors:

Document structure: Choose a splitter that aligns with your document's format (e.g., HTML, Markdown, code).

Semantic coherence: Opt for splitters that maintain the semantic relationship between chunks.

Metadata requirements: If you need additional context about chunk origins, choose splitters that add metadata.

Language specificity: For code documents, use language-specific splitters.

Token limits: Consider your model's token limits when setting chunk sizes.

Overlap Consideration: When maintaining context between chunks is critical, configure overlap settings.
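Two of these factors, document structure and token limits, lend themselves to a small sketch. The extension-to-splitter mapping and the helper names `suggest_splitter` and `max_chunk_tokens` below are illustrative assumptions, not a LangChain feature; the class names are the ones mentioned in this issue, returned as plain strings.

```python
from pathlib import Path

# Illustrative mapping from file extension to a splitter class name from this issue
SPLITTER_BY_EXTENSION = {
    ".html": "HTMLHeaderTextSplitter",
    ".htm": "HTMLHeaderTextSplitter",
    ".md": "MarkdownHeaderTextSplitter",
}
DEFAULT_SPLITTER = "RecursiveCharacterTextSplitter"  # recommended for general use


def suggest_splitter(filename: str) -> str:
    """Pick a splitter name that matches the document's format."""
    return SPLITTER_BY_EXTENSION.get(Path(filename).suffix.lower(), DEFAULT_SPLITTER)


def max_chunk_tokens(context_window: int, reserved_tokens: int, chunks_in_context: int) -> int:
    """Largest chunk size (in tokens) that lets `chunks_in_context` chunks fit in the prompt.

    `reserved_tokens` covers the instructions, the question, and the generated answer.
    """
    budget = context_window - reserved_tokens
    if budget <= 0 or chunks_in_context <= 0:
        raise ValueError("No token budget left for retrieved chunks")
    return budget // chunks_in_context


print(suggest_splitter("notes.MD"))
print(suggest_splitter("report.txt"))
print(max_chunk_tokens(context_window=8192, reserved_tokens=2192, chunks_in_context=6))
```

The budget calculation is intentionally rough: it treats chunks as independent and ignores any separators added between them when the context is assembled.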

What is Overlapping?

Overlapping in text chunking refers to the practice of including a portion of the previous chunk's content at the beginning of the next chunk. This creates an "overlap" of information between adjacent chunks.

Why is this interesting?

Maintains Context

Preserves semantic continuity between chunks

Helps in understanding content that spans chunk boundaries

Improves Retrieval Accuracy

Increases the chances of retrieving relevant information that might be split across chunks

Enhances Language Model Performance

Provides more context for language models when generating responses

Handles Cross-References

Helps in situations where information in one part of the text refers to another
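A small word-level example shows the effect. The `sliding_chunks` helper and the sample sentence are assumptions made for this illustration; real splitters usually measure overlap in characters or tokens rather than words.

```python
def sliding_chunks(words: list[str], chunk_size: int, overlap: int) -> list[str]:
    """Cut a word list into windows of chunk_size words that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


words = "The invoice is due on the first Monday of every month".split()

no_overlap = sliding_chunks(words, chunk_size=6, overlap=0)
with_overlap = sliding_chunks(words, chunk_size=6, overlap=3)

phrase = "the first Monday"
print(no_overlap, any(phrase in c for c in no_overlap))
print(with_overlap, any(phrase in c for c in with_overlap))
```

Without overlap, the phrase "the first Monday" is cut at the chunk boundary and appears in no chunk; with an overlap of three words, one chunk contains it whole. The cost is extra storage and some duplicated content in the index.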

Why Does Metadata Matter in RAG?

Metadata is additional information that describes or gives context to the primary data (in this case, the text chunks). It provides supplementary details about the content, source, structure, or other attributes of the data.

Source Tracking

Helps identify the original document or source of each chunk

Crucial for attribution and fact-checking

Context Preservation

Provides additional context that might be lost in chunking

Can include information about document structure, headers, or section titles

Relevance Assessment

Aids in determining the relevance of chunks to specific queries

Can include keywords, topics, or categories

Version Control

Tracks different versions or updates to documents

Ensures the most up-to-date information is used

Filtering and Sorting

Allows for more precise filtering of chunks based on various criteria

Enables sorting of results based on metadata attributes

Data Governance and Compliance

Tracks data ownership, access permissions, and usage rights

Crucial for maintaining compliance with data protection regulations

Enhanced Retrieval

Enables more sophisticated retrieval strategies beyond simple text matching

Can leverage metadata for semantic or category-based searches

User Experience

Provides additional information to users about the retrieved content

Can be used to display snippets, summaries, or other contextual information

Model Fine-tuning

Can be used as additional features for fine-tuning retrieval or language models

Helps models understand the structure and context of the data

Data Quality Management

Helps in assessing and maintaining the quality of the document collection

Can include information about data quality, completeness, or reliability
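A few of these uses (filtering, version control, and source tracking) can be sketched with plain Python objects. The `Chunk` class, the metadata keys, and the sample data below are hypothetical and chosen only for illustration; in practice the metadata would live alongside the vectors in your store.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    content: str
    metadata: dict = field(default_factory=dict)


chunks = [
    Chunk("Refunds are processed within 14 days.",
          {"source": "policy.md", "section": "Refunds", "version": 2}),
    Chunk("Refunds are processed within 30 days.",
          {"source": "policy.md", "section": "Refunds", "version": 1}),
    Chunk("Shipping takes 3 to 5 business days.",
          {"source": "shipping.md", "section": "Delivery", "version": 1}),
]


def filter_chunks(items: list[Chunk], **criteria) -> list[Chunk]:
    """Filtering: keep chunks whose metadata matches every given key/value pair."""
    return [c for c in items if all(c.metadata.get(k) == v for k, v in criteria.items())]


def latest_per_section(items: list[Chunk]) -> list[Chunk]:
    """Version control: keep only the highest version of each (source, section) pair."""
    latest: dict[tuple, Chunk] = {}
    for c in items:
        key = (c.metadata.get("source"), c.metadata.get("section"))
        if key not in latest or c.metadata.get("version", 0) > latest[key].metadata.get("version", 0):
            latest[key] = c
    return list(latest.values())


def cite(chunk: Chunk) -> str:
    """Source tracking: append the chunk's origin so answers can be attributed."""
    return f'{chunk.content} [{chunk.metadata["source"]} > {chunk.metadata["section"]}]'


policy_chunks = filter_chunks(chunks, source="policy.md")
current_chunks = latest_per_section(chunks)
print([cite(c) for c in current_chunks])
```

Here the outdated 30-day refund chunk is excluded before it can reach the language model, and each remaining chunk carries a citation back to its source.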

Conclusion

Effective chunking and text splitting are fundamental to building high-performance RAG systems. By choosing the right text splitter and fine-tuning its parameters, you can significantly improve the retrieval precision and generation quality of your RAG pipeline. As you develop your system, experiment with different splitting strategies to find the optimal approach for your specific use case.

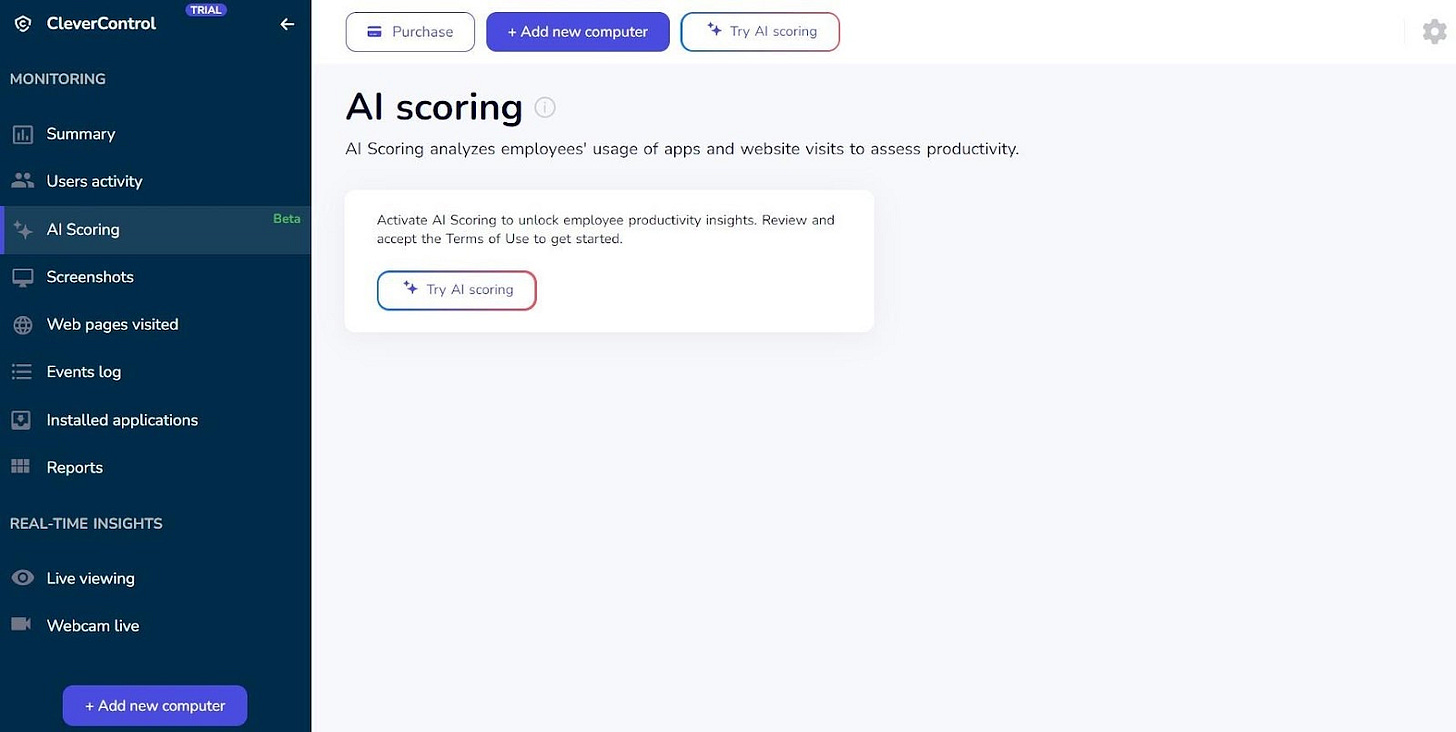

🚀CleverControl: AI-Powered Insights for Enhanced Productivity & Security

This MLPills issue has been sponsored by CleverControl. If you don’t want to see these types of ads, which support my work, please consider becoming a paid subscriber:

As a blogger who values innovation, I'm impressed by how CleverControl uses AI to power employee monitoring software.

Key features:

AI-powered insights: Real-time data analysis for improved performance and productivity.

Personalized recommendations: Tailored guidance for each employee to optimize workflow and reach full potential.

Comprehensive reports: Detailed reports on employee activity, including website usage, keyboard activity, and idle time.

Enhanced security: Robust features like data encryption, access controls, and real-time alerts to protect sensitive information.

Live viewing and screen recording: Monitor employee activity in real-time or review recordings later for dispute resolution and feedback.

Face recognition: Ensures only authorized employees access company devices and systems.

Workflow tracking: Helps employees track their own workflow, identify areas for improvement, and stay on top of tasks.

CleverControl empowers businesses to improve productivity, enhance security, and empower employees to reach their full potential.

👉 Get more information here.

🤖 Tech Round-Up

No time to check the news this week?

This week's TechRoundUp comes full of AI news. From Andrew Ng's new AI fund to Gmail's new Gemini feature.

Let's dive into the latest Tech highlights you probably shouldn’t miss this week 💥

1️⃣ Andrew Ng's New AI Fund

Andrew Ng is raising $120M for his next AI fund! This initiative aims to support startups tackling significant AI challenges. It's a big move for the AI community.

2️⃣ Meta's AI Chatbots on Instagram

Meta is testing user-created AI chatbots on Instagram. Users can now customize chatbots to interact with followers, enhancing engagement and user experience.

3️⃣ ChatGPT for Mac

Mac users, rejoice! ChatGPT is now available on Mac, bringing seamless AI interaction to your desktop. Enhance your productivity with this powerful tool.

4️⃣ Reddit's Protection Against AI Crawlers

Reddit is implementing changes to protect against AI crawlers. These updates are crucial for preserving content integrity and safeguarding user data.

5️⃣ Google's Gemini AI in Gmail

Google introduces Gemini AI to Gmail with a sidebar feature that helps write and summarize emails. Boost your productivity with AI-assisted email management.


You could have received this in full last Saturday, while also helping me carry on with this project and bring you the best possible content.

🧑‍🎓 Are you a student?

Contact me to receive a special offer: david@mlpills.dev