💊 Pill of the week

Retrieval-Augmented Generation (RAG) is reshaping traditional search and AI-driven information retrieval systems by combining the power of retrieval and generation models. However, RAG systems often face challenges when scaling to large data repositories or handling diverse queries. A basic RAG system, which typically relies on semantic search alone, can struggle with both precision and efficiency. This article explores the use of hybrid search—combining sparse (keyword-based) and dense (vector-based) retrieval—to address these limitations, diving into the technical intricacies, practical implementation, and potential challenges.

This article is the first in a two-part series on optimizing RAG. Next week, we'll delve into another critical optimization technique: reranking. Stay tuned for the follow-up article, "Enhancing RAG with Reranking," which will focus on refining retrieval results to ensure the most relevant documents are prioritized for response generation.

Understanding RAG

RAG operates in two main stages:

Retrieval: The system retrieves relevant documents or passages from an external knowledge base based on an input query.

Generation: These retrieved documents are used as context to generate a response.
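Here is a minimal Python sketch of the two stages. The toy corpus, the word-overlap scorer that stands in for a real retriever, and the helper names `retrieve` and `build_prompt` are illustrative assumptions. The generation step is left as a comment because it would require calling an actual language model.

```python
import re

# Toy knowledge base (hypothetical example data).
DOCS = [
    "BM25 is a ranking function used by search engines.",
    "Dense retrievers embed text into vectors.",
    "Paris is the capital of France.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Stage 1 (retrieval): rank documents by word overlap with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Stage 2 input: pack the retrieved passages into the generator's prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

question = "What is the capital of France?"
prompt = build_prompt(question, retrieve(question, DOCS))
# In a real system, `prompt` would now be sent to an LLM to generate the answer.
print(prompt)
```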

The standard RAG system typically uses a dense retriever—often based on a bi-encoder architecture that learns to embed queries and documents into the same vector space—and a sequence-to-sequence generator (such as a transformer). While the dense retriever excels at capturing semantic meaning, it can struggle with precise term matching, making it less reliable in situations where exact phrases, rare words, or domain-specific terminology are essential.

This limitation makes hybrid search a natural solution for improving RAG’s retrieval accuracy, especially for queries that depend on exact terms.


Challenges in RAG Optimization

Quality of Retrieved Documents: Irrelevant documents degrade the performance of the generator, leading to unhelpful or incorrect responses.

Relevance Ranking: The ordering of retrieved documents matters. If the most relevant documents are not prioritized, the generator’s output will be suboptimal.

Scalability: With growing data, balancing retrieval speed with result quality becomes a critical issue.

Domain Specificity: RAG systems often require domain-specific fine-tuning to handle specialized queries effectively. Dense retrieval alone may not suffice in these cases, making hybrid search a valuable alternative.

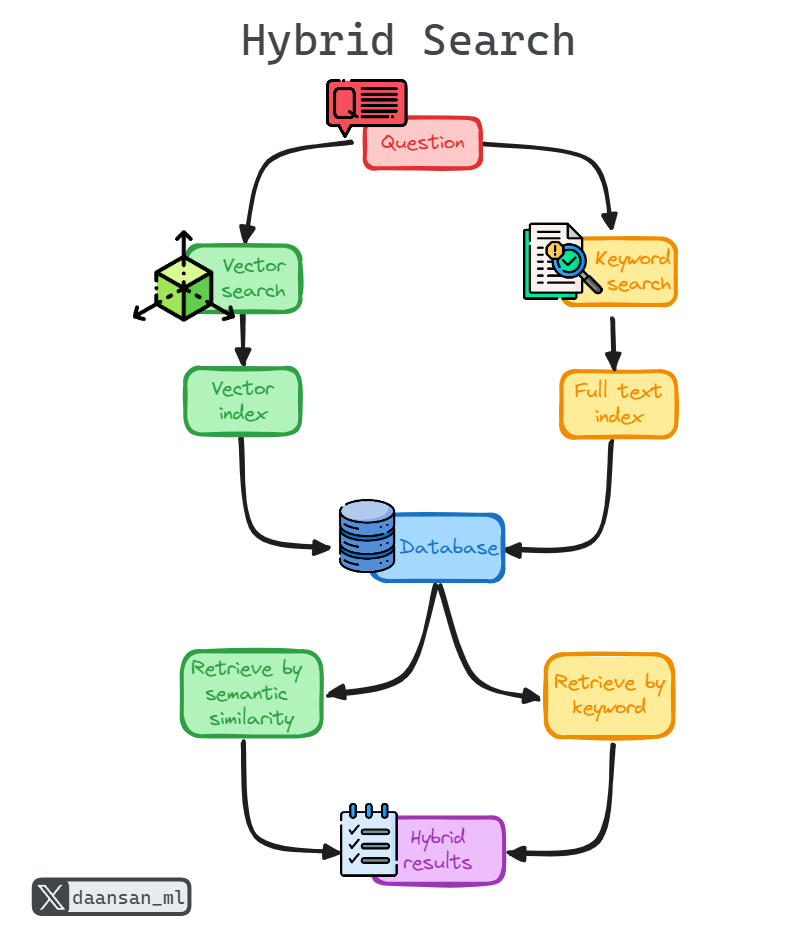

Hybrid Search

Hybrid search combines two key retrieval methods: sparse (keyword-based) and dense (embedding-based) retrieval. Each has its strengths and weaknesses, and hybrid search leverages the best of both worlds.

Sparse Retrieval (Keyword Search)

Sparse retrieval, commonly implemented through algorithms like BM25, ranks documents based on keyword frequency and rarity. BM25 works well for exact keyword matching, making it effective in retrieving documents with specific terms. However, it fails to capture the deeper semantic relationships between words, so it may miss documents that are contextually relevant but do not contain the exact query terms.

BM25 ranks documents based on:

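Term Frequency: The more often a query term appears in a document, the more relevant the document is considered, although the gain saturates (controlled by the parameter k1) and is normalized by document length (controlled by b), so long documents are not favored simply for being long.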

Inverse Document Frequency: The fewer documents contain a query term, the more weight it carries.

This method shines when the query includes rare terms, specific phrases, or domain-specific keywords, but it may struggle to retrieve documents that convey the same meaning in different words.
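The sketch below scores documents with the standard Okapi BM25 formula so the roles of term frequency and IDF are visible. It is a from-scratch illustration, not a production implementation: the tokenized example corpus is hypothetical, `bm25_scores` is a name introduced here, and k1 = 1.5 and b = 0.75 are common default values rather than settings taken from this article. The third document is topically related to the query but shares none of its terms, so it scores exactly zero.

```python
import math
from collections import Counter

def bm25_scores(query: list[str], docs: list[list[str]],
                k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score each tokenized document against a tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        s = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            # IDF: rarer terms carry more weight (Lucene-style, always positive).
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # TF with saturation (k1) and document-length normalization (b).
            norm = tf[term] * (k1 + 1) / (tf[term] + k1 * (1 - b + b * len(d) / avgdl))
            s += idf * norm
        scores.append(s)
    return scores

docs = [
    "error code E1234 occurs when the disk is full".split(),
    "the disk drive stopped working".split(),
    "storage device failure troubleshooting guide".split(),
]
print(bm25_scores("E1234 disk".split(), docs))
```

The rare code "E1234" appears in only one document, so it earns a higher IDF than "disk" and pushes the first document to the top.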

Dense Retrieval (Vector Search)

Dense retrieval embeds both the query and the documents as vectors in a high-dimensional space, with the goal of capturing their underlying semantics. Similarity measures such as cosine similarity quantify how close the query and document vectors are, which serves as a proxy for relevance. Dense retrieval excels at finding semantically related content even when exact word matches are not present.
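A minimal sketch of cosine similarity in plain Python follows. The three-dimensional vectors are hand-picked, hypothetical stand-ins for embeddings (real embedding models output hundreds of dimensions), chosen so that a query and a document with no words in common still point in nearly the same direction.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings".
query = [0.9, 0.1, 0.0]        # "car repair"
doc_auto = [0.8, 0.2, 0.1]     # "fixing automobiles" (no shared words)
doc_cooking = [0.0, 0.1, 0.9]  # "baking bread"

print(cosine_similarity(query, doc_auto))     # close to 1: semantically similar
print(cosine_similarity(query, doc_cooking))  # close to 0: unrelated
```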

However, dense retrieval can fall short when specific terms, rare words, or names are critical. Because embeddings prioritize semantic closeness, they may rank contextually similar documents above documents that contain the exact query terms.

Advantages of Hybrid Search

Hybrid search combines the strengths of sparse and dense retrieval methods:

Improved Accuracy: Combining keyword precision from sparse retrieval with the semantic understanding of dense retrieval results in more relevant document retrieval.

Contextual and Specific Matching: Hybrid systems handle both semantic similarity and exact term matching, improving performance on queries that require specific term recognition, such as abbreviations or technical jargon.

Flexibility: The combination enables the system to perform well across a broader range of query types, from specific fact-based queries to more open-ended or context-based ones.

Limitations of Hybrid Search

While hybrid search offers notable advantages, it comes with some challenges:

Increased Latency: Running both sparse and dense retrieval, plus a fusion step, can lengthen response times, especially when the two searches are not executed in parallel.

Computational Expense: Hybrid systems are more computationally demanding, as they require building, maintaining, and querying both sparse and dense indexes.

Implementation Complexity: Merging results from different retrieval methods can be non-trivial, requiring custom fusion techniques or weighted scoring mechanisms.
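One common way to contain the latency cost is to issue the two searches concurrently, so the wait is roughly the slower of the two rather than their sum. The sketch below assumes two hypothetical backends, `keyword_search` and `vector_search`, which simulate I/O with `time.sleep`. In practice these would be calls to a search engine and a vector database.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for real search backends; sleep simulates network I/O.
def keyword_search(query: str) -> list[str]:
    time.sleep(0.05)
    return ["doc1", "doc2"]

def vector_search(query: str) -> list[str]:
    time.sleep(0.08)
    return ["doc2", "doc4"]

def hybrid_retrieve(query: str) -> tuple[list[str], list[str]]:
    """Run both searches concurrently; latency is roughly the slower of the two."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        kw_future = pool.submit(keyword_search, query)
        vec_future = pool.submit(vector_search, query)
        return kw_future.result(), vec_future.result()

start = time.perf_counter()
kw, vec = hybrid_retrieve("disk error E1234")
print(kw, vec, f"{time.perf_counter() - start:.2f}s")
```

The two result lists still need to be merged afterwards, which is where the fusion step described in the next section comes in.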

Implementation of Hybrid Search

Implementing hybrid search involves the following steps:

Keyword Search: Use sparse retrieval methods like BM25 to score documents based on term frequency and inverse document frequency.

Vector Search: Perform dense retrieval using techniques such as cosine similarity on vector embeddings of the documents and query.

Result Fusion: Combine results from both retrieval methods using techniques such as weighted fusion:

H = (1−α)⋅K + α⋅V

where:

H is the hybrid score,

K is the keyword search score,

V is the vector search score,

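α is a weighting parameter between 0 and 1 that balances the contribution of sparse and dense retrieval: α = 0 gives pure keyword search and α = 1 gives pure vector search.

Because BM25 scores are unbounded while cosine similarity lies in [−1, 1], K and V are usually normalized to a common range (for example, with min-max scaling) before they are combined.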
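Here is a sketch of the weighted fusion formula H = (1−α)⋅K + α⋅V. Because the two score types live on different scales, the sketch min-max normalizes each score list to [0, 1] before mixing. That normalization choice, the example scores, and the function names are assumptions for illustration. A document returned by only one retriever receives 0 from the other.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so BM25 and cosine scores become comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}

def weighted_fusion(keyword: dict[str, float], vector: dict[str, float],
                    alpha: float = 0.5) -> list[tuple[str, float]]:
    """H = (1 - alpha) * K + alpha * V over normalized scores, best first."""
    k, v = min_max(keyword), min_max(vector)
    # A document missing from one result list contributes 0 for that retriever.
    hybrid = {d: (1 - alpha) * k.get(d, 0.0) + alpha * v.get(d, 0.0)
              for d in set(k) | set(v)}
    return sorted(hybrid.items(), key=lambda item: item[1], reverse=True)

bm25 = {"doc1": 12.4, "doc2": 7.1, "doc3": 0.0}     # hypothetical BM25 scores
cosine = {"doc1": 0.62, "doc2": 0.91, "doc4": 0.85}  # hypothetical cosine scores
print(weighted_fusion(bm25, cosine, alpha=0.5))
```

With α = 0.5, doc2 ranks first because it scores well on both lists, while doc1 wins only under pure keyword search (α = 0).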

Alternative fusion techniques, like Reciprocal Rank Fusion (RRF), can also be used to combine results.
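Reciprocal Rank Fusion avoids score normalization by using only each document's position in each ranked list: a document's fused score is the sum of 1 / (k + rank) over the lists it appears in, with k commonly set to 60. The sketch below is a minimal version of that formula; the input rankings and the function name are hypothetical.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]],
                           k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks are 1-based: the top document in each list gets 1 / (k + 1).
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

keyword_ranking = ["doc1", "doc2", "doc3"]
vector_ranking = ["doc2", "doc4", "doc1"]
for doc, score in reciprocal_rank_fusion([keyword_ranking, vector_ranking]):
    print(doc, round(score, 4))
```

Documents that appear near the top of both lists (doc2, doc1) rise above documents found by only one retriever, regardless of how the raw scores were scaled.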

Performance Considerations

Accuracy

By leveraging both sparse and dense retrieval, hybrid systems can capture both exact matches and semantically related content, which can substantially improve the overall retrieval accuracy of RAG systems.

Efficiency

Careful optimization, such as caching frequently accessed vectors or documents, can mitigate the increased latency of running two retrieval algorithms. Tuning the weight parameter α also helps maintain a balance between precision and recall.
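As an example of caching, repeated queries can skip the embedding model entirely. The sketch below memoizes a hypothetical `embed_query` function with Python's `functools.lru_cache`. The letter-count "embedding" is a toy stand-in for a real model call, and returning a tuple keeps the cached value immutable.

```python
from functools import lru_cache

calls = 0  # counts how often the (expensive) embedding step actually runs

@lru_cache(maxsize=10_000)
def embed_query(query: str) -> tuple[float, ...]:
    """Hypothetical embedding function; a real system would call a model here."""
    global calls
    calls += 1
    # Toy deterministic "embedding": counts of the letters a, b, and c.
    q = query.lower()
    return tuple(float(q.count(ch)) for ch in "abc")

embed_query("cheap flights to bali")
embed_query("cheap flights to bali")  # served from the cache, no recomputation
print(calls, embed_query.cache_info())
```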

Scalability

Hybrid retrieval can scale well to large datasets when it is designed with care. For example, the computational burden of dense vector search can be reduced by using sparse retrieval as a first-pass filter, so that only a smaller candidate set is scored with embeddings.
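The first-pass idea can be sketched as follows: an inverted index, which maps each term to the ids of the documents containing it, cheaply narrows the corpus to documents that share at least one query term, and only those candidates are scored with cosine similarity. The corpus, its two-dimensional embeddings, and `two_stage_search` are hypothetical. The design trades recall for speed: a document with no keyword overlap never reaches the dense stage, which reintroduces the weakness of sparse retrieval that hybrid search is meant to address.

```python
import math
from collections import defaultdict

# Hypothetical corpus: id -> (text, toy 2-d embedding).
CORPUS = {
    "d1": ("gpu memory error in training", [0.9, 0.1]),
    "d2": ("out of memory on gpu", [0.8, 0.3]),
    "d3": ("recipe for sourdough bread", [0.1, 0.9]),
}

# Inverted index: term -> set of document ids containing that term.
index: dict[str, set[str]] = defaultdict(set)
for doc_id, (text, _) in CORPUS.items():
    for term in text.split():
        index[term].add(doc_id)

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def two_stage_search(query: str, query_vec: list[float]) -> list[tuple[str, float]]:
    # Stage 1 (sparse): an inverted-index lookup narrows the candidate set.
    candidates = set().union(*(index.get(t, set()) for t in query.split()))
    # Stage 2 (dense): compute cosine similarity only for the candidates.
    scored = [(d, cosine(query_vec, CORPUS[d][1])) for d in candidates]
    return sorted(scored, key=lambda item: item[1], reverse=True)

print(two_stage_search("gpu memory", [1.0, 0.2]))
```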

Memory Usage

Hybrid systems may require more memory due to the need to maintain both inverted indexes for sparse retrieval and vector indexes for dense retrieval.
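A back-of-the-envelope estimate shows why the dense side usually dominates memory. Assuming 1 million documents, 768-dimensional embeddings (a common size, used here only as an assumption), and 4-byte float32 values in a flat, uncompressed index, the raw vectors alone take about 3 GB. Graph-based vector indexes such as HNSW and the inverted index for sparse retrieval add overhead on top of that.

```python
def dense_index_bytes(num_docs: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for float32 vectors in a flat (uncompressed) index."""
    return num_docs * dim * bytes_per_value

size = dense_index_bytes(1_000_000, 768)
print(f"{size / 1e9:.2f} GB")  # → 3.07 GB (raw vectors only, no index overhead)
```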

Conclusion

Hybrid search offers a powerful solution for optimizing RAG systems by blending the exactness of sparse retrieval with the semantic richness of dense retrieval. The ability to match both keywords and concepts improves retrieval accuracy and helps ensure that relevant documents are prioritized, even in large or domain-specific datasets. While hybrid search comes with additional computational and implementation complexities, its benefits in terms of accuracy and flexibility often outweigh the costs.

In our next article, we'll explore how reranking can further enhance the relevance of retrieved documents by intelligently reordering results from hybrid search. Look out for "Enhancing RAG with Reranking" next week!

📖 Book of the week

This week we recommend “Generative AI Application Integration Patterns: Integrate large language models into your applications”, by Juan Pablo Bustos and Luis Lopez Soria.

The book is aimed at developers, tech leads, and AI enthusiasts looking to integrate Generative AI (GenAI) into their applications. It is also suitable for AI practitioners eager to learn real-world techniques for optimizing GenAI models and minimizing hallucinations.

Why should you read it?

Master GenAI tools and strategies: Get hands-on experience with the most essential tools and design patterns to build robust GenAI-powered applications.

Minimize model hallucinations: Learn how to interact with and fine-tune GenAI models to tailor their behavior, ensuring accurate and reliable outputs.

End-to-end AI integration: Follow an easy-to-implement 4-step framework for integrating GenAI into applications, covering ideation, data prep, deployment, and monitoring.

Apply GenAI across use cases: Discover blueprints for common tasks like summarization, intent classification, and question-answering using strategies such as retrieval-augmented generation (RAG) and chain-of-thought reasoning.

Ethical AI development: Explore responsible AI practices, including techniques for bias mitigation, data privacy, and transparency, ensuring compliance with ethical standards.

Stay ahead with the latest models: Keep up with advances in cutting-edge models like GPT-4, DALL-E, Google Gemini, and Anthropic Claude, and learn how to harness their potential in your applications.

⚡Power-Up Corner

Are you ready to take your RAG system from good to exceptional? In this exclusive section, we're pulling back the curtain on advanced techniques that can dramatically enhance your RAG's performance, accuracy, and efficiency.

Imagine a RAG system that:

Adapts to diverse query types, from technical jargon to conceptual explorations

Retrieves results at lightning speed

Continuously learns and improves from each interaction

Balances precision and recall

These are achievable realities with the right approach to hybrid search. Our curated set of practical recommendations draws from years of industry experience, cutting-edge research, and real-world implementations.


Subscribe to Machine Learning Pills to keep reading this post and get 7 days of free access to the full post archives.