💊 Pill of the Week

Welcome back! In Part 1, we explored the foundational concepts of classification, from binary and multi-class setups to essential metrics like accuracy, precision, recall, and specificity.

Now, it’s time to level up.

In this continuation, we’ll move beyond the basics and dive into advanced classification metrics — tools that provide deeper insights, especially in complex or imbalanced datasets. These metrics are critical when evaluating models in real-world scenarios, where simple accuracy often doesn’t tell the full story.

Whether you’re working in healthcare, fraud detection, or document classification, understanding these metrics will help you make smarter choices, tune your models more effectively, and deliver outcomes that matter.

Let’s get started.

Recap of Classification Basics

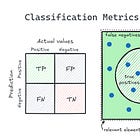

As we covered in Part 1, classification problems can be binary (two classes), multi-class (more than two mutually exclusive classes), or multi-label (samples can belong to multiple classes). The confusion matrix gives us True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN), which form the foundation for all classification metrics.

Basic metrics include:

Accuracy: (TP + TN) / (TP + TN + FP + FN)

Precision: TP / (TP + FP)

Recall (Sensitivity): TP / (TP + FN)

Specificity: TN / (TN + FP)
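As a quick refresher, the four formulas above can be sketched in a few lines of plain Python. The function name `basic_metrics` and the counts are made up for illustration; they are not from Part 1.

```python
def basic_metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall and specificity from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        # Guard against empty denominators (e.g. the model never predicts positive).
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,
    }

# Hypothetical counts: 1,000 samples, only 50 of them positive.
metrics = basic_metrics(tp=30, tn=930, fp=20, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, accuracy comes out at 0.96 while precision and recall are both 0.60, which is the kind of gap the rest of this post is about.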

Now, let's explore more sophisticated metrics and approaches.

Advanced Classification Metrics

F1-Score

The F1-score is the harmonic mean of precision and recall, a special case of the F-Beta score with β=1:

F1 = 2 × (Precision × Recall) / (Precision + Recall)

The F1-score provides a single metric that balances precision and recall when they are equally important. It's particularly valuable when working with imbalanced datasets where accuracy might be misleading. Unlike the arithmetic mean, the harmonic mean penalizes extreme values, ensuring that high F1-scores are only achieved when both precision and recall are high.

For example, in document classification or information retrieval systems, the F1-score helps ensure that the model both finds most relevant documents (high recall) and that most retrieved documents are relevant (high precision).
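To see how the harmonic mean punishes lopsided results, here is a minimal sketch of the general F-Beta score, (1 + β²) × P × R / (β² × P + R), which reduces to F1 when β=1. The helper name `f_beta` and the input values are illustrative assumptions.

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score; beta=1 gives the F1-score (harmonic mean of precision and recall)."""
    if precision == 0 and recall == 0:
        return 0.0  # avoid dividing by zero when both inputs are zero
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)

# Two hypothetical models with the same arithmetic mean of precision and recall (0.6).
balanced = f_beta(0.6, 0.6)   # precision and recall both moderate
lopsided = f_beta(1.0, 0.2)   # perfect precision, very low recall

print(f"balanced F1: {balanced:.3f}")
print(f"lopsided F1: {lopsided:.3f}")
```

The balanced model keeps an F1 of 0.6, while the lopsided one drops to about 0.33 even though a simple average would rate them the same.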

G-Mean (Geometric Mean)

The G-Mean calculates the geometric mean of sensitivity and specificity:

G-Mean = √(Sensitivity × Specificity)

G-Mean is particularly useful when balanced performance across both positive and negative classes is desired, especially with highly imbalanced datasets. Unlike the F1-score, which focuses on the positive class through precision and recall, G-Mean explicitly considers performance on the negative class through specificity.

This metric is valuable in scenarios like fraud detection where the dataset might contain very few fraudulent transactions (positives) compared to legitimate ones (negatives), but where performance on both classes matters.
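A small sketch makes the fraud example concrete. It computes the G-Mean as the square root of sensitivity times specificity; the function name `g_mean` and the transaction counts are hypothetical.

```python
import math

def g_mean(tp, tn, fp, fn):
    """Geometric mean of sensitivity (recall) and specificity."""
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    return math.sqrt(sensitivity * specificity)

# Hypothetical fraud data: 10 fraudulent vs 990 legitimate transactions.
# A model that never flags fraud is 99% accurate but has zero sensitivity.
always_negative = g_mean(tp=0, tn=990, fp=0, fn=10)
# A model that catches 8 of 10 frauds at the cost of 99 false alarms.
detector = g_mean(tp=8, tn=891, fp=99, fn=2)

print(f"always-negative G-Mean: {always_negative:.3f}")
print(f"detector G-Mean: {detector:.3f}")
```

Because the two rates are multiplied, the G-Mean collapses to zero as soon as either class is ignored entirely, so the "never flag fraud" model scores 0 despite its high accuracy.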

ROC Curve and AUC

The Receiver Operating Characteristic (ROC) curve plots the True Positive Rate (Sensitivity) against the False Positive Rate (1-Specificity) at various classification thresholds. Instead of committing to a single threshold, the ROC curve visualizes performance across all possible thresholds.

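The Area Under the Curve (AUC) summarizes the model's performance across all thresholds into a single value between 0 and 1. It can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. An AUC of 0.5 suggests no discriminative ability (equivalent to random guessing), an AUC of 1.0 indicates perfect classification, and a value below 0.5 means the model ranks samples worse than chance.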
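The threshold sweep behind the ROC curve can be sketched in plain Python. This is a minimal illustration, assuming labels are 0/1 and both classes are present; the function names and the toy scores are made up, and in practice a library such as scikit-learn would normally handle this.

```python
def roc_curve_points(y_true, scores):
    """Return (FPR, TPR) points obtained by lowering the threshold one distinct score at a time."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    # Rank samples from highest to lowest score.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample of a group of tied scores.
        is_last = i == len(ranked) - 1
        if is_last or ranked[i + 1][0] != score:
            points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under a piecewise-linear curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Toy data: one negative (score 0.7) is ranked above one positive (score 0.6).
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
roc_points = roc_curve_points(y_true, scores)
roc_auc = auc(roc_points)
print(f"AUC: {roc_auc:.3f}")
```

Of the nine positive-negative pairs in this toy data, eight are ranked correctly, so the AUC is 8/9 ≈ 0.889.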

ROC-AUC is particularly useful in:

Comparing different models without having to select a specific threshold

Applications where the optimal threshold might change over time

Scenarios where different operating conditions might require different trade-offs between true positives and false positives

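However, ROC curves can be overly optimistic with highly imbalanced datasets. The False Positive Rate is computed over the large negative class, so even a substantial number of false positives barely moves it. In those cases the precision-recall curve often provides a more informative view.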

Precision-Recall Curve

Similar to the ROC curve, the Precision-Recall curve plots precision against recall at various classification thresholds. The area under the precision-recall curve provides a summary of model performance focused on the positive class.

Precision-Recall curves are particularly useful when:

Working with highly imbalanced datasets where the positive class is rare but important

The negative class is large and less interesting than the positive class

You want to focus on the trade-off between precision and recall specifically

For example, in information retrieval or document classification, the precision-recall curve helps visualize how retrieving more documents (increasing recall) affects the proportion of relevant results (precision).
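The same kind of threshold sweep produces the precision-recall curve. The sketch below is illustrative only: the function names and toy data are assumptions, labels are taken to be 0/1 with at least one positive, and the area is summarized with a step-wise sum (precision times the gain in recall), which is one common convention often called average precision.

```python
def precision_recall_points(y_true, scores):
    """Return (recall, precision) points, one per distinct score threshold."""
    pos = sum(y_true)
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = []
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample of a group of tied scores.
        is_last = i == len(ranked) - 1
        if is_last or ranked[i + 1][0] != score:
            points.append((tp / pos, tp / (tp + fp)))
    return points

def average_precision(points):
    """Step-wise area under the PR curve: sum of precision times the gain in recall."""
    area, prev_recall = 0.0, 0.0
    for recall, precision in points:
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area

# Hypothetical imbalanced data: 2 positives among 10 samples.
y_true = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
scores = [0.95, 0.90, 0.85, 0.80, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
pr_points = precision_recall_points(y_true, scores)
ap = average_precision(pr_points)
print(f"average precision: {ap:.3f}")
```

Reading the points in order shows the trade-off directly: reaching the second positive doubles recall from 0.5 to 1.0 but requires accepting two false positives, which pulls precision down from 1.0 to 0.5.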

📖 Book of the Week

If you're a Tableau professional ready to level up your skills — whether in enterprise BI, consulting, or data platform strategy — you need to check this out:

“Tableau Cookbook for Experienced Professionals”

By Pablo Sáenz de Tejada & Daria Kirilenko

This book isn’t just a reference — it’s a tactical guide to building robust, secure, and high-performing Tableau environments. From performance tuning to API automation and cloud-scale governance, this is the real-world toolkit senior BI pros need today.

What sets it apart?

It bridges daily dashboards and enterprise systems — helping you turn Tableau into a strategic analytics platform:

✅ Build advanced data models and interactive dashboards

✅ Master LOD expressions, spatial joins, and zone visibility

✅ Extend Tableau with APIs, TabPy, and developer tools

✅ Secure your data and content with best practices for cloud and server

✅ Learn the latest features in Tableau 2025.1, including the Content Migration Tool

This is a must-read for:

📊 Tableau developers and consultants

🛠 BI admins and engineers

🏢 Data platform leads

🔍 Analysts working across complex, governed environments

If you’re ready to push Tableau beyond basic dashboards — and drive transformation at scale — this book is for you.

⚡Power-Up Corner

Extending to Multi-class Problems

For multi-class classification problems, the binary metrics can be extended using different averaging methods:
