Issue #69 - The life cycle in a Data Science project

💊 Pill of the Week

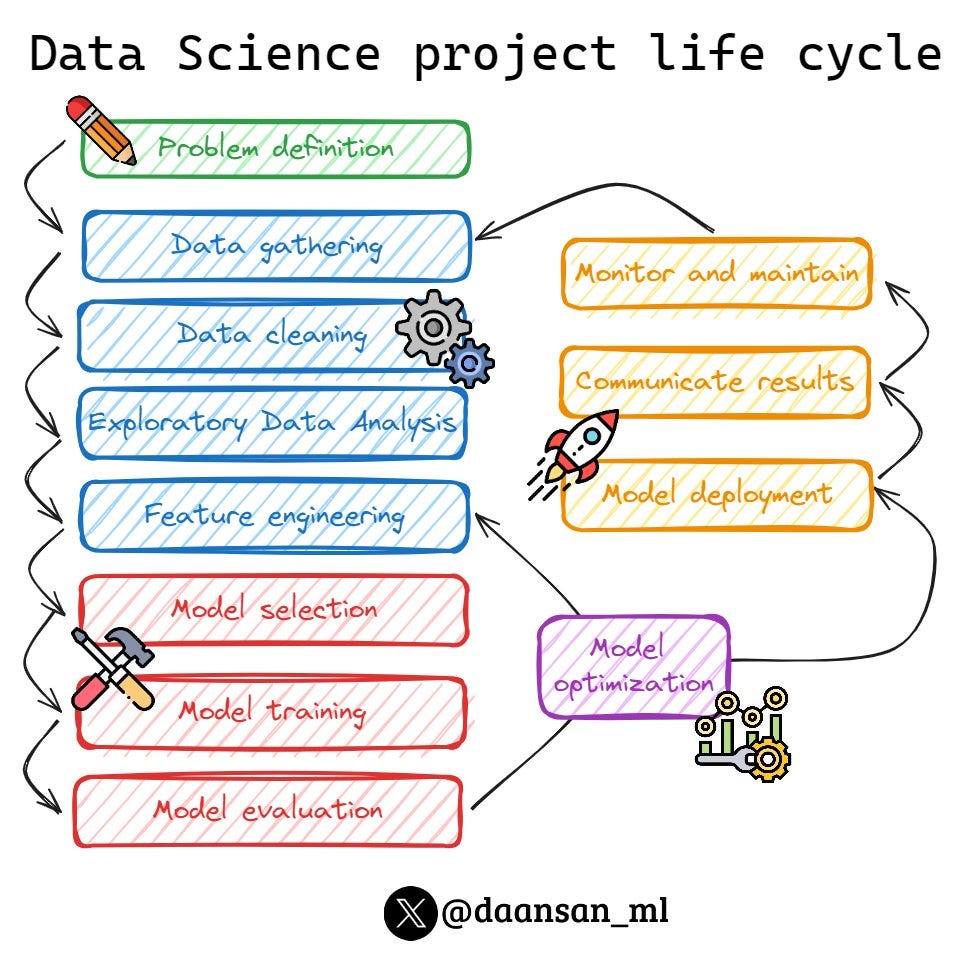

Data Science has become an integral part of decision-making processes across various industries. Understanding the fundamental steps of a Data Science project is crucial. This MLPills issue will guide you through the 12 essential steps that form the backbone of any successful Data Science project.

But before starting…

🚨Exciting Internship Opportunity in Data Science

A forward-thinking company is offering an internship (unpaid for now), with the possibility of future employment and company shares down the line. They are looking for a data scientist or an aspiring data scientist eager to develop their skills in cutting-edge AI technologies, including Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), intelligent agents, and LangChain. This is a unique opportunity to contribute to the development of the next generation of CRM systems where AI will play a crucial role.

If you're passionate about AI and ready to grow and learn, please send an email to pol@daialog.app with david@mlpills.dev in CC.

Let’s now start with the content of this issue!

1. Define the Problem or Question to be Answered

The first and most critical step in any Data Science project is to clearly articulate the problem you aim to solve or the question you want to address. This step sets the foundation for the entire project and determines its direction.

Key aspects of this step include:

Collaborating with stakeholders to understand their needs and expectations

Defining specific, measurable objectives

Identifying key performance indicators (KPIs) that will measure success

Ensuring alignment between the project goals and broader business objectives

A well-defined problem statement might look like this: "How can we reduce customer churn by 20% in the next quarter using predictive modeling?"
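To make an objective like this measurable, it helps to pin down the arithmetic behind the KPI. The sketch below assumes that "reduce churn by 20%" means a relative reduction of the churn rate and uses made-up customer counts; both the function name and the numbers are illustrative, not taken from a real project.

```python
def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Share of customers lost during the period."""
    return customers_lost / customers_at_start


# Hypothetical quarter: 10,000 customers at the start, 500 of them lost.
baseline_rate = churn_rate(10_000, 500)

# Assumption: "reduce churn by 20%" is a relative reduction of the rate.
target_rate = baseline_rate * (1 - 0.20)

print(f"Baseline churn rate: {baseline_rate:.1%}")
print(f"Target churn rate:   {target_rate:.1%}")
```

Agreeing on whether the target is relative or absolute is worth doing with stakeholders up front, since the two readings lead to very different goals.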

2. Gather and Understand the Data

Once the problem is defined, the next step is to collect relevant data and gain a thorough understanding of its structure, quality, and potential limitations.

This step involves:

Identifying data sources (internal databases, external APIs, public datasets, etc.)

Assessing data availability and accessibility

Understanding data formats and structures

Evaluating data quality and completeness

Documenting data lineage and any known biases or limitations

It's crucial to gather a comprehensive dataset that covers all aspects of the problem you're trying to solve. This might include historical customer data, transaction logs, demographic information, or any other relevant data points.

3. Prepare and Clean the Data

Data preparation and cleaning is often the most time-consuming part of a Data Science project, but it's essential for ensuring the reliability and quality of your analysis.

Key tasks in this step include:

Handling missing values (imputation, deletion, or other techniques)

Removing duplicates

Detecting and addressing outliers

Standardizing data formats and units

Correcting inconsistencies and errors in the data

For example, you might need to convert all currency values to a single currency, standardize date formats, or handle missing customer information in a consistent manner.
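A minimal pandas sketch of these cleaning tasks could look like the following. The column names, the toy records, and the fixed exchange rates are all assumptions made for illustration; a real project would pull rates from a trusted source and choose an imputation strategy that fits the data.

```python
import pandas as pd

# Hypothetical raw customer records; names and values are illustrative only.
raw = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "signup_date": ["2023-01-05", "2023-02-05", "2023-02-05", "2023-03-10", None],
    "monthly_spend": [30.0, 45.0, 45.0, None, 52.0],
    "currency": ["USD", "EUR", "EUR", "USD", "USD"],
})

# Assumed fixed exchange rates, purely for illustration.
RATES_TO_USD = {"USD": 1.0, "EUR": 1.1}


def clean(df: pd.DataFrame) -> pd.DataFrame:
    # Remove exact duplicate rows
    out = df.drop_duplicates().copy()
    # Standardize dates; missing or unparseable values become NaT
    out["signup_date"] = pd.to_datetime(out["signup_date"], errors="coerce")
    # Convert every amount to a single currency
    out["monthly_spend_usd"] = out["monthly_spend"] * out["currency"].map(RATES_TO_USD)
    # Impute missing spend with the median of the converted values
    median_spend = out["monthly_spend_usd"].median()
    out["monthly_spend_usd"] = out["monthly_spend_usd"].fillna(median_spend)
    return out.drop(columns=["monthly_spend", "currency"]).reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
```

Note that the currency conversion happens before imputation, so the median is computed on comparable values.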

4. Perform Exploratory Data Analysis (EDA)

Exploratory Data Analysis is a crucial step that allows you to gain insights, identify patterns, and uncover relationships between variables in your dataset.

EDA typically involves:

Calculating summary statistics (mean, median, standard deviation, etc.)

Visualizing data distributions using histograms, box plots, and scatter plots

Identifying correlations between variables

Detecting anomalies or interesting patterns in the data

Formulating hypotheses based on observed patterns

Tools like Python's Matplotlib, Seaborn, or Plotly can be invaluable for creating insightful visualizations during this phase. In a churn project, for example, EDA might surface patterns such as the impact of late payments on churn.
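As a rough sketch of the numerical side of EDA, the snippet below computes summary statistics, correlations, and the churn rate by late-payment group with pandas. The toy dataset, its column names, and the "2 or more late payments" cut-off are assumptions chosen only to illustrate the idea.

```python
import pandas as pd

# Hypothetical toy data; column names and values are illustrative only.
df = pd.DataFrame({
    "late_payments": [0, 0, 1, 3, 2, 0, 4, 1],
    "monthly_spend": [30, 42, 55, 20, 25, 60, 18, 48],
    "churned":       [0, 0, 0, 1, 1, 0, 1, 1],
})

# Summary statistics (count, mean, std, quartiles) for every column
summary = df.describe()

# Pairwise Pearson correlations between the numeric columns
correlations = df.corr()

# Churn rate for customers with few vs. many late payments
late_group = df["late_payments"].ge(2).map(
    {True: "2+ late payments", False: "0-1 late payments"}
)
churn_by_late = df.groupby(late_group)["churned"].mean()

print(summary)
print(correlations["churned"])
print(churn_by_late)
```

A pattern found this way is a hypothesis to test, not a conclusion, especially on a sample this small.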

5. Engineer Relevant Features

Feature engineering involves transforming existing data or creating new features to enhance the predictive power of your models.

This step may include:

Creating interaction terms between existing features

Binning continuous variables into categorical ones

Applying mathematical transformations (log, square root, etc.)

Encoding categorical variables (one-hot encoding, label encoding, etc.)

Extracting relevant information from complex data types (e.g., text or datetime)

For instance, in a customer churn prediction model, you might create a new feature that represents the ratio of complaints to total interactions, which could be a strong predictor of churn risk.
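The transformations listed above can be sketched with pandas and NumPy as follows. All column names, bin edges, and values here are hypothetical; the point is only to show one possible shape for each technique, including the complaint-ratio feature.

```python
import numpy as np
import pandas as pd

# Hypothetical customer data; names and values are illustrative only.
df = pd.DataFrame({
    "complaints": [0, 2, 0],
    "total_interactions": [10, 8, 0],
    "monthly_spend": [20.0, 55.0, 120.0],
    "plan": ["basic", "premium", "basic"],
    "signup_date": pd.to_datetime(["2022-01-15", "2023-06-01", "2021-11-30"]),
})

# Ratio feature, guarding against division by zero
safe_total = df["total_interactions"].replace(0, np.nan)
df["complaint_ratio"] = (df["complaints"] / safe_total).fillna(0.0)

# Mathematical transformation to compress a skewed variable
df["log_spend"] = np.log1p(df["monthly_spend"])

# Binning a continuous variable (assumed bin edges)
df["spend_band"] = pd.cut(
    df["monthly_spend"], bins=[0, 30, 80, np.inf], labels=["low", "mid", "high"]
)

# Extracting information from a datetime column
df["signup_month"] = df["signup_date"].dt.month

# One-hot encoding a categorical variable
features = pd.get_dummies(df, columns=["plan"])
print(features)
```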

6. Select Appropriate Modeling Techniques

Choosing the right modeling approach is crucial for the success of your project. The selection depends on various factors, including the nature of your problem, the type of data you have, and the desired outcomes.

Considerations for model selection include:

The type of problem (classification, regression, clustering, etc.)

The size and complexity of your dataset

The interpretability requirements of your model

The computational resources available

The trade-off between model complexity and performance

Common modeling techniques include linear regression, decision trees, random forests, support vector machines, and neural networks, among others.

7. Train the Models Using the Prepared Data

With your modeling approach selected, the next step is to train your models using the prepared data.

This process typically involves:

Splitting your data into training and testing sets

Initializing the model with appropriate parameters

Feeding the training data into the model

Adjusting the model's internal parameters to minimize prediction errors

Implementing cross-validation techniques to ensure robustness

It's important to keep track of different model versions and their respective performances during this phase.
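Here is a minimal scikit-learn sketch of that training loop. It uses a synthetic dataset from `make_classification` as a stand-in for real prepared data, and the random forest and its settings are assumptions picked for illustration rather than a recommendation.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score, train_test_split

# Synthetic stand-in for a prepared dataset (illustrative only)
X, y = make_classification(
    n_samples=500, n_features=8, n_informative=4, random_state=0
)

# Hold out a test set that is never used during training
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Initialize the model with explicit parameters
model = RandomForestClassifier(n_estimators=100, random_state=0)

# 5-fold cross-validation on the training data only
cv_scores = cross_val_score(model, X_train, y_train, cv=5, scoring="accuracy")

# Fit on the full training set, then check the held-out test set
model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)

print(f"CV accuracy: {cv_scores.mean():.3f} (+/- {cv_scores.std():.3f})")
print(f"Test accuracy: {test_accuracy:.3f}")
```

Fixing `random_state` makes runs repeatable, which helps when comparing model versions.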

8. Evaluate the Model's Performance

After training, it's crucial to assess how well your models perform. This evaluation helps you understand the model's strengths and weaknesses and guides further refinement.

Key aspects of model evaluation include:

Selecting appropriate evaluation metrics (accuracy, precision, recall, F1-score, RMSE, etc.)

Comparing model predictions against known outcomes in the test set

Analyzing the model's performance across different subgroups or segments of data

Identifying areas where the model performs well or poorly

Visualizations like confusion matrices, ROC curves, or residual plots can be helpful in understanding model performance.
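For a classification problem, the metrics above can be computed with scikit-learn as in this small sketch. The labels and predictions are made up by hand so the numbers are easy to check; in a real project they would come from the test set and the trained model.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

# Hypothetical true labels and model predictions (1 = churned)
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]

accuracy = accuracy_score(y_true, y_pred)
precision = precision_score(y_true, y_pred)
recall = recall_score(y_true, y_pred)
f1 = f1_score(y_true, y_pred)

# Rows are true classes, columns are predicted classes:
# [[true negatives, false positives],
#  [false negatives, true positives]]
cm = confusion_matrix(y_true, y_pred)

print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f}")
print(cm)
```

With these toy values, accuracy is 0.80 while precision and recall are both 0.75, a reminder that accuracy alone can look better than the model's ability to find churners.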

9. Refine and Tune the Models for Better Results

Based on the evaluation results, you may need to refine and tune your models to improve their performance.

This step may involve:

Adjusting hyperparameters using techniques like grid search or random search

Trying different algorithms or ensemble methods

Applying regularization techniques to prevent overfitting

Incorporating feature selection methods to focus on the most important variables

Iterating on feature engineering based on model insights

The goal is to find the optimal balance between model complexity and performance, avoiding both underfitting and overfitting.
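A grid search over a few hyperparameters might be sketched like this with scikit-learn's `GridSearchCV`. The synthetic data, the tiny grid, and the choice of F1 as the scoring metric are assumptions for illustration; a realistic grid would be guided by the model and the evaluation results.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for a prepared dataset (illustrative only)
X, y = make_classification(
    n_samples=300, n_features=8, n_informative=4, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Small, assumed grid: 2 x 2 = 4 candidate configurations
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [3, None],
}

search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=3,
    scoring="f1",
)

# Tune on the training data only, so the test set stays untouched
search.fit(X_train, y_train)

best_params = search.best_params_
test_f1 = search.score(X_test, y_test)

print("Best parameters:", best_params)
print(f"Best CV F1: {search.best_score_:.3f}, test F1: {test_f1:.3f}")
```

Limiting `max_depth` is one simple way to regularize tree-based models; comparing the cross-validated score with the test score gives a first hint of overfitting.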

10. Deploy the Finalized Model into a Production Environment

Once you have a satisfactory model, the next step is to deploy it into a production environment where it can be used to make real-world predictions or decisions.

Deployment considerations include:

Ensuring the model's scalability and efficiency

Setting up appropriate infrastructure (cloud services, on-premise servers, etc.)

Implementing monitoring systems to track the model's performance

Establishing data pipelines for real-time or batch predictions

Ensuring compliance with relevant regulations and data privacy laws

Tools like Docker, Kubernetes, or cloud-based ML platforms can be helpful in streamlining the deployment process.

11. Communicate the Findings and Results to Stakeholders

Effective communication of your findings is crucial for the success and adoption of your Data Science project.

Key aspects of this step include:

Preparing clear and concise reports or presentations

Creating compelling visualizations to illustrate key insights

Translating technical results into business-friendly language

Providing actionable recommendations based on your findings

Addressing potential questions or concerns from stakeholders

Remember, the most sophisticated model is of little value if its insights aren't effectively communicated to decision-makers.

12. Monitor and Maintain the Deployed Model

The work doesn't end with deployment. Continuous monitoring and maintenance are essential to ensure your model remains accurate and relevant over time.

This final step involves:

Regularly evaluating the model's performance on new data

Monitoring for data drift and concept drift (changes in the input data or in the relationship between inputs and outcomes)

Collecting feedback from users and stakeholders

Periodically retraining the model with new data

Updating the model as business needs or data characteristics change

In a churn project, for example, this could mean continuously tracking prediction accuracy and retraining the model as customer behavior changes.
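One simple way to track accuracy over time is a rolling window over recent predictions whose true outcomes are now known. The sketch below uses only the Python standard library; the class name, window size, and retraining threshold are hypothetical choices, and a production system would typically add drift checks on the input data as well.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.8):
        self.outcomes = deque(maxlen=window)  # True where prediction was correct
        self.threshold = threshold

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Only decide once the window is full, to avoid noisy early alarms
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy < self.threshold


# Hypothetical stream of (predicted, actual) churn labels
monitor = AccuracyMonitor(window=5, threshold=0.8)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 1)]:
    monitor.record(predicted, actual)

print(f"Rolling accuracy: {monitor.accuracy:.2f}")
print("Retrain?", monitor.needs_retraining())
```

Here three of the five recent predictions are correct, so the rolling accuracy falls below the assumed 0.8 threshold and the monitor flags the model for retraining.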

By following these 12 steps, you can ensure a comprehensive and structured approach to your Data Science projects. Remember, while these steps provide a solid framework, the specific details and emphasis may vary depending on the nature of your project and organizational context. Flexibility and adaptability are key to navigating the complex landscape of Data Science successfully.

🌎Real-World example

Before finishing, let’s walk through a concrete example of how to apply these 12 essential steps of a Data Science project to a real-world business challenge: predicting and reducing customer churn for a telecom company.

You’ll see how each step—from defining the problem to deploying the final model—is crucial in creating a reliable churn prediction system that helps the company retain more customers. This illustrative example is easy to follow, with no code involved, making it accessible to all.

However, we will send this on Wednesday ⚠️ONLY to our paid subscribers⚠️

For our paid subscribers, we offer a detailed, step-by-step guide that dives deeper into each phase of the project. You'll gain insights into the specific techniques and tools used, along with practical tips on implementation. This comprehensive explanation is designed to help you apply these strategies effectively in your work, even if you're not coding.

Here’s a very brief outline of what you’ll get:

Define the Problem: Predict and reduce telecom customer churn by 20% next quarter.

Gather Data: Collect customer demographics, usage, billing, and support interactions.

Prepare Data: Clean data by handling missing values, standardizing formats, and removing duplicates.

Exploratory Data Analysis (EDA): Identify churn patterns, such as the impact of late payments on churn.

Feature Engineering: Create features like the ratio of customer complaints to total interactions.

Select Models: Choose a random forest classifier to predict customer churn.

Train Models: Split data into training/testing sets and train the random forest model.

Evaluate Performance: Measure accuracy, precision, and recall to assess churn prediction.

Refine Models: Tune model hyperparameters and use ensemble methods to improve prediction accuracy.

Deploy Model: Implement the churn prediction model on a cloud platform for real-time analysis.

Communicate Results: Present churn factors and actionable retention strategies to stakeholders.

Monitor Model: Continuously track prediction accuracy and retrain the model as customer behavior changes.

⚠️It will NOT be accessible to anyone who becomes a paid subscriber after this Wednesday (14th August 2024).

Subscribe here to receive it next Wednesday 👇
