Issue #71 - LangChain's text processing methods I

Stuff and Map Reduce

Welcome to issue number 71 of Machine Learning Pills! Today we introduce a new section: ⚡Power-Up Corner! Here we bring you cutting-edge tips, advanced strategies, and practical insights to supercharge your workflows.

Whether you're looking to master the latest tools, streamline your processes, or unlock new performance potential, Power-Up Corner is your go-to resource for actionable knowledge and expert advice related to our main section: Pill of the Week. Stay tuned for each issue’s latest power moves and get ready to elevate your game!


Let’s finally start!

💊 Pill of the week

LangChain provides a rich set of tools to effectively manage and process text. One of the key challenges when working with LLMs is handling varying sizes of text input. Whether you're dealing with short paragraphs or entire documents, LangChain offers several chain methods to manage and process text efficiently.

These methods—Stuff, Map Reduce, Map Rerank, and Refine—each serve unique purposes and are tailored to specific scenarios. This issue explores the first two methods in detail, providing insights into their applications, strengths, and potential limitations.

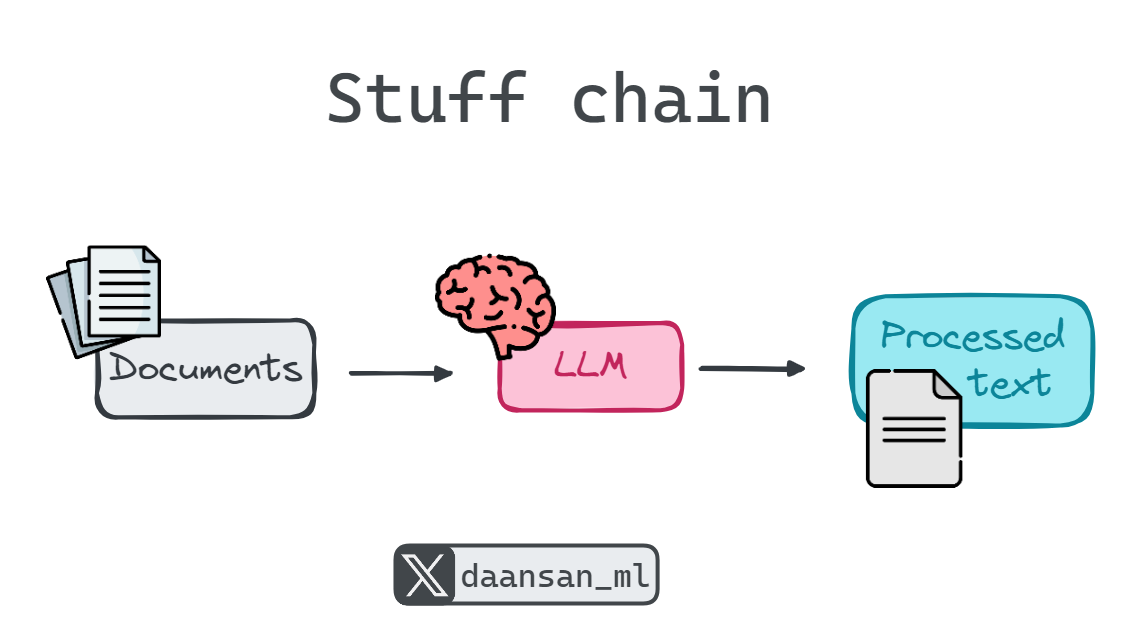

1. Stuff Chain

The Stuff chain is the simplest and most straightforward method of handling text in LangChain. This approach involves concatenating all the input texts into a single string, which is then passed to the language model in one go. The model processes this combined input and returns a single output.
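To make the idea concrete, here is a minimal sketch of the "stuff" pattern in plain Python. It does not use LangChain's actual API: `stuff_documents`, `fake_llm`, and the prompt template are hypothetical names chosen for illustration, and the model is stood in for by any callable that maps a prompt string to a response string.

```python
from typing import Callable, List


def stuff_documents(
    docs: List[str],
    llm: Callable[[str], str],
    prompt_template: str = "Summarize the following text:\n\n{text}",
) -> str:
    """Concatenate every document and send the result to the model once."""
    # Join all inputs into a single string, separated by blank lines.
    combined = "\n\n".join(docs)
    # Build one prompt that contains the whole combined input.
    prompt = prompt_template.format(text=combined)
    # A single model call produces the single output.
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model, used only for this sketch."""
    return f"[model saw {len(prompt)} characters]"


docs = ["LangChain helps manage text.", "Stuff concatenates all inputs."]
result = stuff_documents(docs, fake_llm)
```

Swapping `fake_llm` for a real model client should be the only change needed, as long as that client can be wrapped in a function that takes a prompt and returns text.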

When to Use Stuff Chain

The Stuff chain is ideal when:

The total size of the combined text is within the model's token limit.

The texts to be processed are relatively small and can be easily handled in a single batch.

There is a need for simplicity and speed in processing.

The task requires preserving the full context of the input.

Strengths of Stuff Chain

Simplicity: It is easy to implement and understand. You simply "stuff" all the text into one input and let the model handle it.

Speed: Since the whole input is handled in a single model call, it is typically faster than methods that need several calls.

Context Preservation: The model has access to the entire input at once, allowing it to maintain full context.

Limitations of Stuff Chain

Token Limit: The primary limitation of the Stuff chain is the token limit imposed by LLMs. If the combined input exceeds the model's token limit, it cannot process the text effectively, leading to truncation or errors.

Memory Intensity: For very large inputs, this method can be memory-intensive, because the entire combined input has to be held in memory and sent to the model in a single request.
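One way to guard against the token limit is to estimate the size of the combined input before stuffing it. The sketch below is only an approximation: `estimate_tokens` assumes roughly four characters per token, which is a rule of thumb rather than a real tokenizer, and `fits_in_context`, `context_limit`, and `reserved_for_output` are hypothetical names introduced for this example.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude token estimate: about 4 characters per token (an assumption)."""
    # Ceiling division, so any non-empty text counts as at least one token.
    return (len(text) + 3) // 4


def fits_in_context(
    docs: List[str],
    context_limit: int,
    reserved_for_output: int = 256,
    separator: str = "\n\n",
) -> bool:
    """Return True if the stuffed input plus an output budget fits the limit."""
    combined = separator.join(docs)
    return estimate_tokens(combined) + reserved_for_output <= context_limit


short_docs = ["A short paragraph.", "Another short paragraph."]
use_stuff = fits_in_context(short_docs, context_limit=4096)
```

If the check fails, that is a signal to reach for a chunk-based method instead. For accurate counts, the tokenizer that matches the target model should replace the character heuristic.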

2. Map Reduce Chain

The Map Reduce chain is a more sophisticated method that breaks down the input text into smaller chunks, processes each chunk independently, and then aggregates the results. This approach is inspired by the MapReduce programming model commonly used in distributed computing.
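The split, map, and reduce steps described above can be sketched in a few lines of plain Python. As with any illustration, this is a simplified version under stated assumptions and not LangChain's implementation: `split_into_chunks`, `map_reduce`, the word-based chunking, and both prompt templates are hypothetical, and `llm` is any callable that takes a prompt and returns text.

```python
from typing import Callable, List


def split_into_chunks(text: str, chunk_size: int) -> List[str]:
    """Split text into chunks of at most `chunk_size` words (a simple assumption)."""
    words = text.split()
    return [
        " ".join(words[i : i + chunk_size])
        for i in range(0, len(words), chunk_size)
    ]


def map_reduce(
    text: str,
    llm: Callable[[str], str],
    chunk_size: int = 200,
    map_prompt: str = "Summarize this passage:\n\n{text}",
    reduce_prompt: str = "Combine these summaries into one:\n\n{text}",
) -> str:
    """Process each chunk independently, then aggregate the partial results."""
    chunks = split_into_chunks(text, chunk_size)
    # Map step: one model call per chunk, each independent of the others.
    partials = [llm(map_prompt.format(text=chunk)) for chunk in chunks]
    # Reduce step: one final call that merges the per-chunk outputs.
    return llm(reduce_prompt.format(text="\n\n".join(partials)))
```

Because the map calls do not depend on each other, they could be run in parallel. The sketch runs them sequentially to keep the example short.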