RW #5 - No-Code Customer Service Agent with LangFlow

How to create AI agents that think, retrieve, and act with your business knowledge

💊 Pill of the Week

Imagine a customer service operation where AI agents don't just rely on generic responses, but actually understand the business inside and out. Agents that can instantly access FAQs, product manuals, and policies—but only when they need to. This is the reality that Retrieval-Augmented Generation (RAG) combined with ReAct (Reasoning + Acting) agents brings to modern customer service.

This issue explores how to use LangFlow to build sophisticated AI systems that transform customer inquiry handling. Whether you are managing product support, policy questions, or technical assistance, the same approach applies across industries.

The Foundation

Before diving into implementation, let's establish the theoretical foundation that makes these intelligent agents possible.

What is RAG (Retrieval-Augmented Generation)?

RAG is a technique that helps language models become smarter by giving them access to external information—kind of like letting the model "look things up" before answering a question.

Normally, language models like GPT are limited to what they learned during training. They don't know anything that happened afterward, and they can sometimes "hallucinate" facts. RAG helps fix that. It works by combining two things: a search system that finds relevant information (retrieval), and a language model that uses that information to generate a response (generation).

Here's how it works in practice: when a customer asks a question, the system first searches through a collection of documents or data to find the most relevant pieces. Then it passes those results to the language model, which uses them as context to answer the question. So the answer isn't just based on memory—it's grounded in real, retrieved content.
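To make the retrieve-then-generate flow concrete, here is a minimal sketch in plain Python. It is not how LangFlow implements retrieval: the word-overlap ranking is a simple stand-in for a real search system, the `DOCUMENTS` list is invented for illustration, and the function names `retrieve` and `build_prompt` are hypothetical. The final prompt is what would be handed to a language model.

```python
import re

# Hypothetical mini knowledge base; the texts are invented for illustration.
DOCUMENTS = [
    "Electronics can be returned within 30 days of purchase with original packaging.",
    "Standard shipping takes 3 to 5 business days.",
    "Wireless headphones charge through the USB-C port on the right ear cup.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Retrieval step: rank documents by word overlap with the question.

    A real RAG system would use embeddings and a vector database here.
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Generation step input: put the retrieved text in front of the question."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


question = "Can electronics be returned within 30 days?"
context = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context)
print(prompt)
```

The important design point is the order of operations: the model never sees the whole knowledge base, only the few passages that the retrieval step judged relevant.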

This setup allows RAG to be more accurate, especially for niche or up-to-date topics. Some systems even go further by showing where the information came from or using multiple search steps for complex queries. Of course, it's not perfect—too much information can overwhelm the model, and hallucinations can still happen—but overall, it's a big step toward more trustworthy AI systems.

LangFlow: Making AI Workflows Visual and Accessible

LangFlow is a visual, no-code platform that transforms complex AI workflows into intuitive drag-and-drop interfaces. Think of it as the "Figma for AI systems"—instead of writing hundreds of lines of code to connect language models, databases, and APIs, you build sophisticated AI agents by connecting components visually. Each component represents a specific function (like loading documents, creating embeddings, or querying databases), and you connect them with simple lines to create the data flow.

What makes LangFlow particularly powerful for RAG systems is how it handles the complexity behind the scenes. Setting up a traditional RAG pipeline involves managing multiple APIs, handling data transformations, coordinating between different AI models, and ensuring everything works together seamlessly. LangFlow abstracts away this complexity while still giving you full control over each component's behavior and configuration.

For businesses, this means the difference between needing a team of AI engineers and being able to prototype and deploy intelligent agents with existing technical staff. The visual approach also makes it easier to understand, modify, and troubleshoot these systems—you can literally see how information flows from customer questions through document retrieval to final responses.

Now that we understand how LangFlow simplifies AI workflows, let’s walk through the architecture of a typical customer service agent built with this tool.

The Architecture

The agent is built in six stages, each mapping to one or more LangFlow components.

1. Document Foundation

Before an AI agent can answer questions intelligently, it must first understand how information is organized. Raw documents—whether FAQs, product manuals, or policies—aren’t optimized for retrieval. That’s where chunking comes in. It transforms unstructured or semi-structured content into digestible, context-aware units that balance retrieval accuracy, performance, and storage tradeoffs. The goal isn’t just to split documents—it’s to preserve meaning and relevance.

Chunking isn't just about size limits—it's about optimizing information density and retrieval relevance. Sophisticated approaches include:

Semantic Chunking: Split based on topic boundaries rather than character counts

Hierarchical Chunking: Create chunks at multiple granularities (paragraph, section, document)

Overlapping Windows: Sliding windows with strategic overlap to maintain context

Metadata-Aware Chunking: Preserve document structure (headers, tables, lists) in chunk boundaries

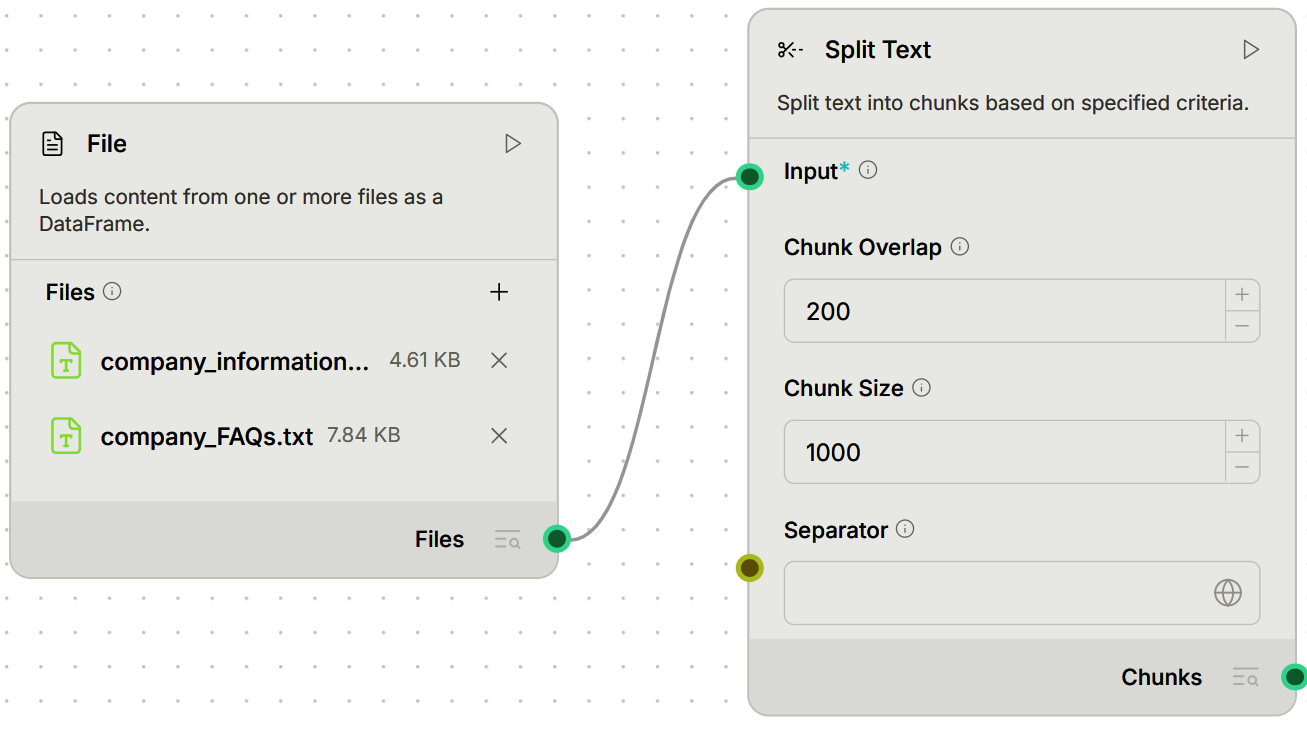

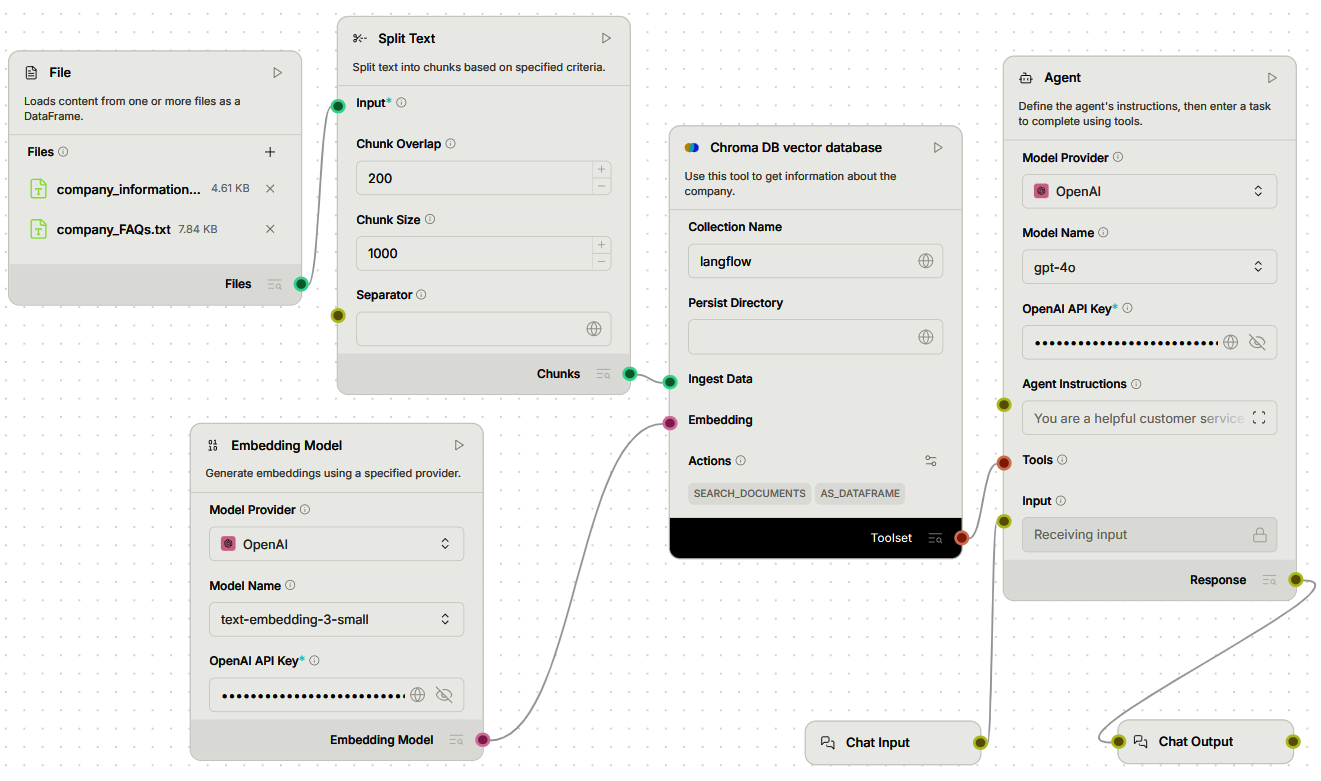

In LangFlow, this begins with a File Loader component connected to a Text Splitter. The configuration typically uses 1,000 characters with 200-character overlap, balancing context preservation with retrieval precision.
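As a rough illustration of what a 1,000-character chunk with a 200-character overlap means, here is a simplified sliding-window splitter. It is a sketch under assumptions: the function name `split_text` is hypothetical, and it cuts purely on character positions, whereas real text splitters usually also try to break on separators such as paragraphs or sentences.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap their neighbors."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


# A synthetic 2,500-character document made of repeating digits.
sample = "".join(str(i % 10) for i in range(2500))
chunks = split_text(sample)
print([len(chunk) for chunk in chunks])  # → [1000, 1000, 900]
```

Each window starts 800 characters after the previous one, so the last 200 characters of one chunk reappear at the start of the next. That repetition is what keeps a sentence that straddles a boundary retrievable from at least one chunk.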

In this example we load two files: general company information and the FAQs from the company's website. Both contain information a customer is likely to ask a customer service bot about.

Business Reality: A company's knowledge base includes FAQs, product manuals, policy documents, and troubleshooting guides. These documents vary in length and complexity—from short FAQ answers to comprehensive product specifications.

Performance Considerations:

Chunk Size vs. Precision: Smaller chunks increase precision but may lose context

Overlap vs. Storage: More overlap improves recall but increases storage requirements

Retrieval Granularity: Match chunk size to expected query complexity

2. Semantic Understanding

What Are Embeddings? Embeddings are how AI models represent meaning using numbers. Instead of just seeing words as symbols, embeddings let models understand how words, phrases, or documents relate to each other.

When a model processes a sentence, it turns it into a vector—a list of numbers that capture its meaning. Sentences with similar meanings get vectors that are close together. That's why embeddings are so useful in things like search, clustering, and recommendation—they let the AI measure semantic similarity.

Modern embeddings are contextual, which means the meaning of a word changes depending on where it appears—just like how "bank" means something different near "river" than it does near "money." And as models advance, embeddings can even represent text, images, and code in the same shared space, enabling powerful cross-modal applications.

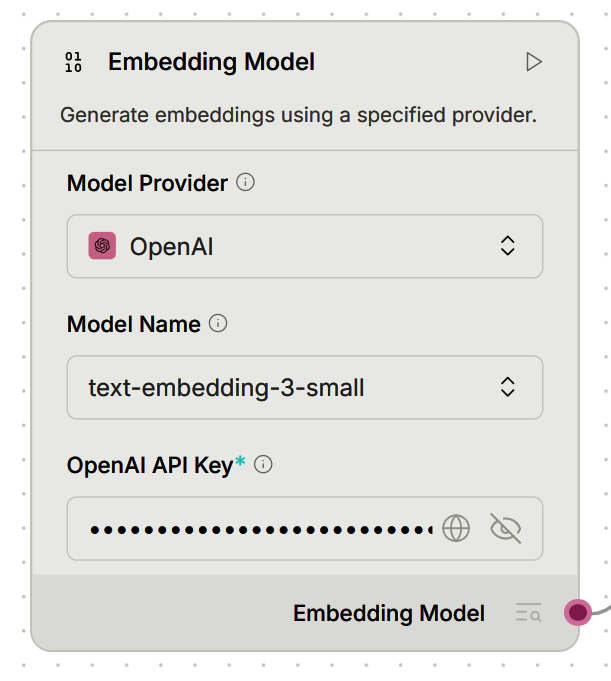

In LangFlow, document chunks feed into an Embedding Model component using OpenAI's text-embedding-3-small or similar. Each chunk transforms into a dense vector—a numerical representation capturing semantic meaning.

Real-World Impact: When customers ask about "product returns," "item refunds," or "sending back purchases," the embedding model recognizes these as semantically related concepts. The agent finds relevant information based on meaning, not just keyword matching.
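The idea of "vectors that are close together" can be sketched with cosine similarity. The three-dimensional vectors below are hand-made for illustration only. A real embedding model such as text-embedding-3-small produces vectors with far more dimensions, and the actual values would differ.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors, invented for illustration: related phrases point in similar directions.
vectors = {
    "product returns": [0.9, 0.1, 0.0],
    "item refunds": [0.8, 0.2, 0.1],
    "store opening hours": [0.0, 0.2, 0.9],
}

query = vectors["product returns"]
scores = {phrase: cosine_similarity(query, vec) for phrase, vec in vectors.items()}

for phrase, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{phrase}: {score:.2f}")
```

With these toy values, "item refunds" scores close to 1 against "product returns", while "store opening hours" scores close to 0, even though none of the phrases share a word.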

3. Knowledge Storage

What is a Vector Database? A vector database is built for a very different kind of search—semantic search. Instead of matching keywords, it finds things that mean the same thing, even if they use different words. To do this, it stores information as vectors—large sets of numbers that capture the meaning of text, images, or other content.

For example, a sentence like "I love chocolate" might be turned into a 1,536-dimensional vector using an embedding model. Then, when you search for "favorite desserts," the database can find documents with similar meanings, even if the words don't exactly match.

Behind the scenes, it uses special data structures—like HNSW graphs—to find the closest vectors quickly, even in massive datasets. And to compare similarity, it relies on mathematical techniques like cosine similarity or Euclidean distance. This kind of search is at the heart of recommendation systems, chatbots, and any AI tool that needs to understand what content is about, not just how it's phrased.
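Here is a deliberately tiny in-memory version of that idea, to show what "find the closest vectors" means. It is a sketch, not how ChromaDB works internally: the class name `TinyVectorStore` is hypothetical, the two-dimensional vectors are invented, and the search compares the query against every stored vector instead of using an index like HNSW.

```python
import math
from dataclasses import dataclass, field


@dataclass
class TinyVectorStore:
    """Brute-force vector store supporting cosine and Euclidean distance."""

    metric: str = "cosine"
    items: list = field(default_factory=list)  # list of (text, vector) pairs

    def add(self, text: str, vector: list[float]) -> None:
        self.items.append((text, vector))

    def _distance(self, a: list[float], b: list[float]) -> float:
        if self.metric == "euclidean":
            return math.dist(a, b)
        # Cosine distance = 1 - cosine similarity.
        dot = sum(x * y for x, y in zip(a, b))
        return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))

    def search(self, query_vector: list[float], k: int = 2) -> list[str]:
        """Return the texts of the k stored vectors closest to the query."""
        ranked = sorted(self.items, key=lambda item: self._distance(query_vector, item[1]))
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore(metric="cosine")
store.add("Return policy for electronics", [0.9, 0.1])
store.add("Refund processing times", [0.8, 0.3])
store.add("Headphone charging guide", [0.1, 0.9])

# A query vector that points in the "returns and refunds" direction.
results = store.search([1.0, 0.0], k=2)
print(results)
```

Comparing against every vector is fine for a handful of documents but scales linearly with the collection size, which is why production vector databases rely on approximate nearest-neighbor indexes.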

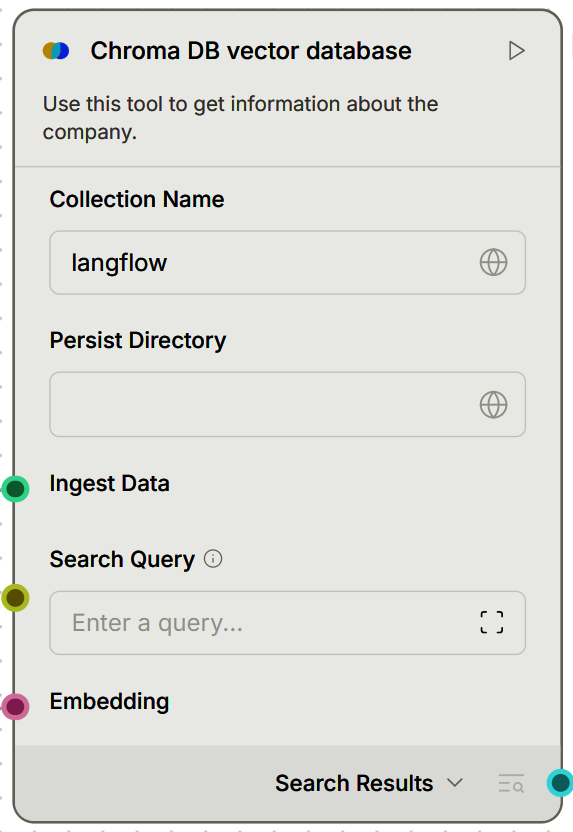

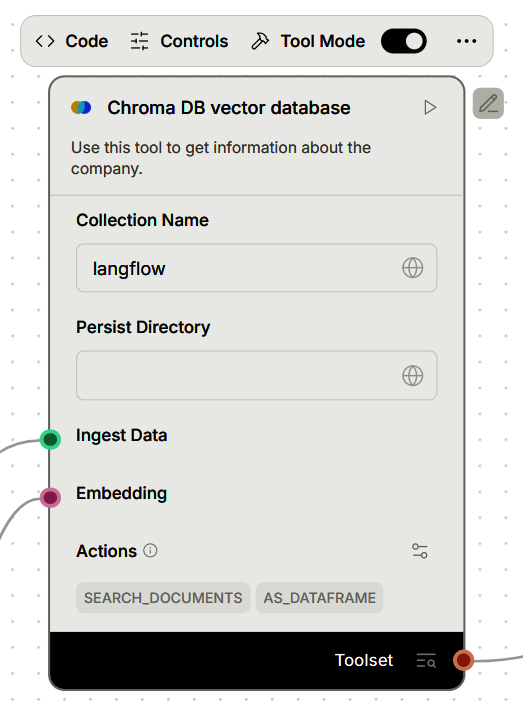

In LangFlow, ChromaDB is configured with a collection name and persistent storage directory. This creates the agent's knowledge repository, which survives system restarts and can grow as new documents are added.

Why did we choose ChromaDB? It is an ideal vector store for businesses because it runs locally for full data privacy and cost control, requires minimal setup without complex cluster management, supports persistent storage and multiple similarity metrics, and offers metadata filtering plus collection management for flexible, efficient, and secure retrieval workflows.

4. Tool Integration

Storing knowledge is only part of the equation—making it actionable is the next step.

What Are Tools in AI Systems? In the world of AI agents, tools are like superpowers. While a language model on its own can generate and understand text, tools let it interact with the world—run calculations, look up real-time info, call APIs, query a database, and more.

Each tool is a function with a clear structure: it has inputs, outputs, and a short explanation so the AI knows when to use it. When the agent needs to solve a problem, it can choose the right tool, use it, look at the results, and continue from there. This unlocks a whole new level of usefulness.

Advanced AI agents can even chain tools together to perform complex tasks, learn about new tools at runtime, or check if a tool call is valid before using it. It's a way of extending the model's reach—connecting reasoning with real-world capabilities.

In LangFlow, the critical step involves enabling "Tool Mode" on the ChromaDB component. This transforms the database from a static resource into a dynamic tool the agent can call, creating a discrete function called SEARCH_DOCUMENTS.

Why This Architecture Matters: Instead of always searching or never searching, the agent now makes intelligent decisions about when to use the tool, what parameters to pass, and how to interpret results.
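A tool, in this sense, is just a function bundled with a name and a description the model can read. The sketch below shows that shape in plain Python. The `Tool` class, the `FAQ` dictionary, and the keyword lookup are assumptions made for illustration and are not LangFlow's internal representation. Only the name SEARCH_DOCUMENTS comes from the flow described above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """A callable plus the metadata an agent needs to decide when to use it."""

    name: str
    description: str
    func: Callable[[str], str]

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


# Hypothetical knowledge base keyed by keyword; a real flow would query the vector store.
FAQ = {
    "return": "Electronics can be returned within 30 days of purchase with original packaging.",
    "charg": "If a device will not charge, try a different cable and power adapter first.",
}


def search_documents(query: str) -> str:
    """Return every FAQ answer whose keyword appears in the query."""
    lowered = query.lower()
    hits = [answer for keyword, answer in FAQ.items() if keyword in lowered]
    return "\n".join(hits) if hits else "No matching documents found."


SEARCH_DOCUMENTS = Tool(
    name="SEARCH_DOCUMENTS",
    description="Search company FAQs, manuals, and policies. Input: a short search query.",
    func=search_documents,
)

print(SEARCH_DOCUMENTS.run("What is the return policy for electronics?"))
```

The description matters as much as the function: it is the only information the agent has when deciding whether a given question warrants a search.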

5. The Reasoning Engine

What is a ReAct Agent? A ReAct agent is a type of AI that doesn't just generate answers—it thinks through problems and takes actions to solve them. The name stands for "Reasoning + Acting", and that's exactly how it works: the agent reasons about the situation, takes an action (like calling a tool or API), looks at the result, and then decides what to do next. This loop continues until it completes the task.

What makes ReAct agents powerful is their ability to adapt on the fly. They don't just follow a fixed script—they learn from what happens along the way. If a step fails, they can recognize it and try a different approach. They can even reflect on their own reasoning and revise it when needed. This makes them especially good at handling complex tasks that require multiple steps or tools, and they're more transparent too—you can follow their train of thought as they work.

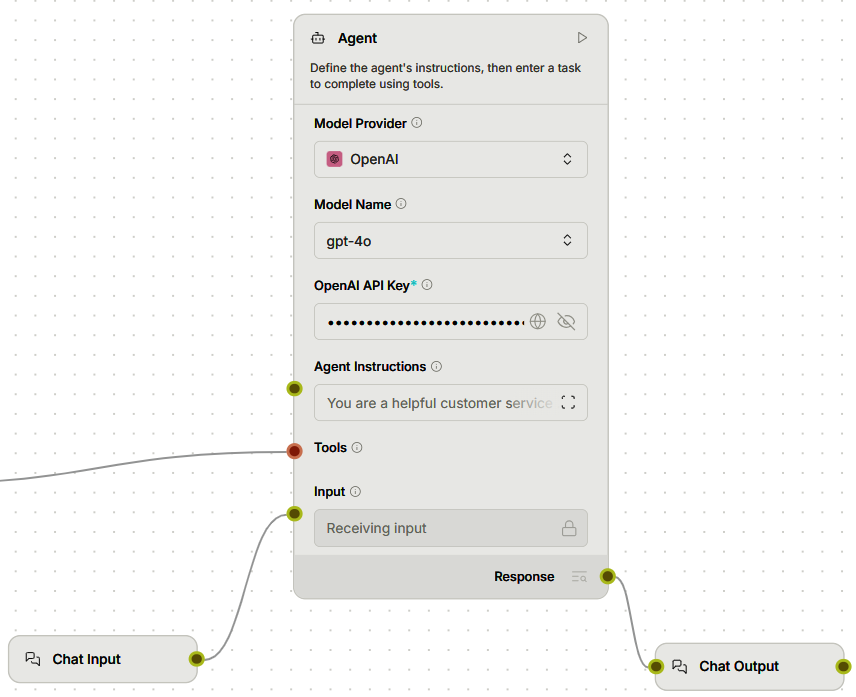

The Agent component in LangFlow uses a large language model (like GPT-4) with carefully crafted instructions: "Act as a helpful customer service representative. Use the SEARCH_DOCUMENTS tool when customers ask about specific products, policies, or procedures."

The Decision Process: For each customer query, the agent follows this pattern:

Analyze: What type of question is this?

Decide: Do I need to search company knowledge?

Act: Call the search tool with relevant terms

Synthesize: Combine retrieved information with clear explanation

Respond: Provide accurate, helpful customer service

Advanced Reasoning: The agent can chain multiple searches, refine its queries, and synthesize information from different sources—searching for product specifications, then pricing information, then warranty details to answer one comprehensive customer question.
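The reason, act, observe loop can be sketched in a few lines. To keep the example runnable without an API key, the language model is replaced by a rule-based function, `fake_llm`, which is purely hypothetical. In a real agent that decision comes from the LLM, and `search_documents` would query the vector store instead of returning a hard-coded sentence.

```python
def search_documents(query: str) -> str:
    """Hypothetical stand-in for the SEARCH_DOCUMENTS tool."""
    if "return" in query.lower():
        return "Returns are accepted within 30 days of purchase with original packaging."
    return "No matching documents found."


def fake_llm(question: str, observations: list[str]) -> dict:
    """Rule-based stand-in for the model's reasoning step (Analyze and Decide)."""
    keywords = ("policy", "return", "warranty")
    needs_knowledge = any(word in question.lower() for word in keywords)
    if needs_knowledge and not observations:
        return {"thought": "Specific policy question; search company knowledge.",
                "action": "SEARCH_DOCUMENTS", "input": question}
    if observations:
        return {"thought": "I have the information I need.",
                "action": "FINISH", "input": f"According to our policy: {observations[-1]}"}
    return {"thought": "Social greeting; no company knowledge needed.",
            "action": "FINISH", "input": "Hello! How can I help you today?"}


def run_agent(question: str, max_steps: int = 3) -> tuple[str, list[str]]:
    """Loop: reason, act, observe, until the agent decides to finish."""
    observations: list[str] = []
    trace: list[str] = []  # record of actions taken, useful for debugging
    for _ in range(max_steps):
        step = fake_llm(question, observations)
        trace.append(step["action"])
        if step["action"] == "FINISH":
            return step["input"], trace
        observations.append(search_documents(step["input"]))  # Act, then Observe
    return "Sorry, I could not find an answer.", trace


answer, trace = run_agent("What's the return policy for electronics?")
print(trace)   # → ['SEARCH_DOCUMENTS', 'FINISH']
print(answer)

greeting, greeting_trace = run_agent("Hi there, how are you?")
print(greeting_trace)  # → ['FINISH']
```

The `max_steps` cap is a common safeguard: it stops the loop if the agent keeps searching without ever reaching an answer.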

6. Customer Experience

The Components: Chat Input and Chat Output create the conversational interface, but the real sophistication lies in how the system manages the entire interaction flow.

The Result: Customers interact with what feels like the company's most knowledgeable service representative—someone who has instant access to every piece of company information but only references what's relevant to their specific need.

To recap: we load company information and split it into chunks, then store them in a vector database. To enable efficient search, we use an embedding model connected to the vector database to convert text into numerical representations that capture meaning. We convert the vector database search into a tool so the LLM can use it to find relevant information when answering customer questions. Finally, we connect this search tool to the LLM along with chat input and output components, creating an intelligent customer service agent that can access company knowledge on demand to provide accurate, helpful responses.

LangFlow is great for rapid prototyping, offering a visual and intuitive interface. However, it can limit the flexibility required for building more complex chatbots and advanced agent workflows, which LangGraph handles more effectively thanks to its greater control and modularity. You can find an example of how to build a ReAct agent in LangGraph in a previous issue.

🎓 Two Paths to Mastering AI*

👨💻 Build and Launch Your Own AI Product

Full-Stack LLM Developer Certification

Become one of the first certified LLM developers and gain job-ready skills:

Build a real-world AI product (like a RAG-powered tutor)

Learn Prompting, RAG, Fine-tuning, LLM Agents & Deployment

Create a standout portfolio + walk away with certification

Ideal for developers, data scientists & tech professionals

🎯 Finish with a project you can pitch, deploy, or get hired for

🛠️ 90+ hands-on lessons | Slack support | Weekly updates

💼 Secure your place in a high-demand, high-impact GenAI role

🔗 Join the August Cohort – Start Building

💼 Lead the AI Shift in Your Industry

AI for Business Professionals

No code needed. Learn how to work smarter with AI in your role:

Save 10+ hours/week with smart prompting & workflows

Use tools like ChatGPT, Claude, Gemini, and Perplexity

Lead AI adoption and innovation in your team or company

Includes role-specific modules (Sales, HR, Product, etc.)

✅ Build an “AI-first” mindset & transform the way you work

🔍 Learn to implement real AI use cases across your org

🧠 Perfect for managers, consultants, and business leaders

🔗 Start Working Smarter with AI Today

🎓 Both Courses Come With:

Certification

Lifetime Access & Weekly Updates

30-Day Money-Back Guarantee

Hands-On Projects, Not Just Theory

📅 August Cohorts Now Open — Don’t Wait

🔥 Invest in the skillset that will define the next decade.

*Sponsored: by purchasing any of their courses you would also be supporting MLPills.

Real-World Customer Service Scenario

Let's examine how this system handles typical customer service interactions:

Customer: "What's the return policy for electronics?"

Agent's Internal Process:

Reasoning: "This is a specific policy question requiring company knowledge"

Action: Calls SEARCH_DOCUMENTS with parameters targeting electronics return policies

Observation: Receives relevant policy chunks with metadata

Synthesis: Combines policy details with clear, customer-friendly explanation

Response: "For electronics, the return policy allows returns within 30 days of purchase with original packaging. Here are the specific steps..."

Customer: "My wireless headphones won't charge properly"

Agent's Internal Process:

Reasoning: "This is a technical issue requiring troubleshooting knowledge"

Action: Calls SEARCH_DOCUMENTS with "wireless headphones charging problems"

Observation: Retrieves relevant troubleshooting procedures

Multi-step Planning: Identifies a sequence of diagnostic steps

Response: "I can help you with that charging issue. Let's try these troubleshooting steps in order..."

Customer: "Hi there, how are you?"

Agent's Internal Process:

Reasoning: "This is a social greeting—no company knowledge needed"

Direct Response: "Hello! I'm doing well, thank you for asking. How can I help you today?"

Notice how the agent adapts its behavior based on the customer's specific needs, demonstrating the power of ReAct architecture in real-world applications.

The Business Impact: Why This Matters
