💊 Pill of the week

Deciding between a simple, rule-based system and a sophisticated machine learning (ML) model is a critical choice in software development. While it's tempting to jump to the latest AI, often a few well-written if-then statements are more effective. Here’s how to know when you truly need to make the leap to ML.

Your likes ❤️ and shares 🔄 fuel my work and help me keep bringing you the best content!

Thank you, David

The Power and Place of Simple Rules

A rule-based system operates on a set of handcrafted, deterministic logic. If a specific condition is met, a specific action is taken. Think of a simple email filter:

IF subject CONTAINS "you've won" THEN move to Spam.
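As a minimal sketch, that single rule could look like this in Python. The function name `route_email` and the folder names are assumptions made for illustration; real mail clients have their own filter syntax.

```python
def route_email(subject: str) -> str:
    """Return the target folder for an email, using one handwritten rule."""
    # Case-insensitive substring match on the phrase from the rule above.
    if "you've won" in subject.lower():
        return "Spam"
    return "Inbox"


print(route_email("Congratulations, you've WON a cruise!"))  # Spam
print(route_email("Meeting notes for Tuesday"))              # Inbox
```

The whole decision fits in one readable condition, which is exactly why rules are attractive when the problem allows it.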

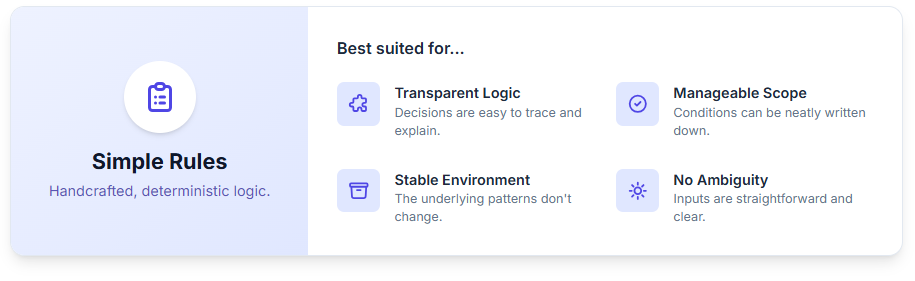

You should stick with simple rules when your problem has:

High Explainability Needs: Rule logic is transparent, so you can trace exactly why the system made a particular decision. This is crucial for applications like tax calculations or regulatory compliance.

A Manageable Number of Conditions: The logic can be described in a few dozen or even a few hundred if-else statements without becoming a tangled mess.

Deterministic and Stable Environment: The underlying patterns don't change. Password strength requirements, for example, are set by policy and don't evolve on their own.

No Need for Nuance: The inputs are straightforward and unambiguous. A transaction is either over $10,000 or it isn't; there's no gray area.

In short, if you can clearly write down the logic in a flowchart, simple rules are often your best bet. They are cheap to build, fast to run, and easy to debug.
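One way to keep rules explainable as they grow is to store them as data and return the reason alongside the decision. The sketch below assumes a hypothetical transaction dictionary with `amount` and `country` keys; the rule names and actions are invented for illustration, and only the $10,000 threshold comes from the example above.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    applies: Callable[[dict], bool]
    action: str


# Hypothetical rules, checked in order. The first match wins.
RULES = [
    Rule("large_transaction", lambda tx: tx["amount"] > 10_000, "flag_for_review"),
    Rule("missing_country", lambda tx: not tx.get("country"), "reject"),
]


def decide(tx: dict) -> tuple[str, str]:
    """Return (action, reason) so every decision can be traced to a rule."""
    for rule in RULES:
        if rule.applies(tx):
            return rule.action, f"matched rule '{rule.name}'"
    return "approve", "no rule matched"


print(decide({"amount": 12_500, "country": "ES"}))
# ('flag_for_review', "matched rule 'large_transaction'")
print(decide({"amount": 10_000, "country": "ES"}))
# ('approve', 'no rule matched')
```

A transaction of exactly $10,000 is not "over" the threshold, so it is approved. Edge cases like this are easy to check when the rule is one line long.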

When to Bring in Machine Learning

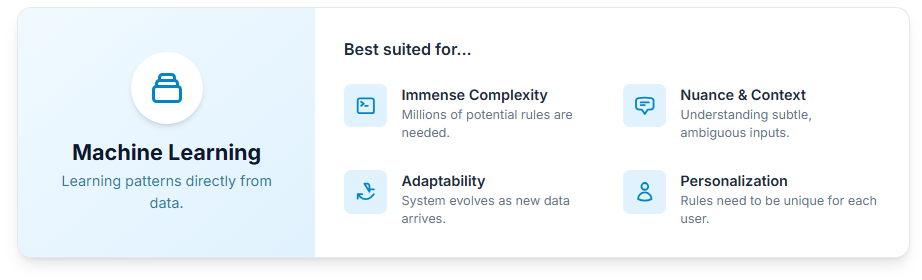

Machine learning becomes necessary when a problem outgrows what a rule-based system can handle. ML models learn patterns from data rather than being explicitly programmed. This makes them ideal for problems that are too complex, nuanced, or dynamic for manual rules.

You need ML when your problem involves:

Unmanageable Complexity: The number of potential if-then rules would be in the millions or billions. Consider identifying a cat in a photo. You can't write rules for every possible combination of pixels, lighting, and angles. An ML model, however, can learn the general "pattern" of a cat from thousands of examples.

Handling Nuance and Ambiguity: Simple rules fail when context is key. Sentiment analysis is a classic example. The statement "This horror movie was sick!" is positive, but a rule-based system might flag the word "sick" as negative. An ML model trained on modern language can understand this nuance.

Adaptability and Scalability: The world changes, and your system needs to adapt. A spam filter with hardcoded rules will quickly become obsolete as spammers change their tactics. An ML model can be continuously retrained on new examples of spam to stay effective. This ability to learn from new data is ML's greatest strength.

Personalization: The "rules" need to be different for every user. A recommendation engine like Netflix's can't use a global set of rules. It builds a personalized model for each user based on their unique viewing history, a task impossible to manage with if-then logic.

Finding Hidden Patterns: Often, the most valuable insights are hidden in noisy data. ML is essential for tasks like fraud detection, where criminals are actively trying to disguise their behavior. A model can identify subtle, multi-variate patterns across thousands of transactions that no human could ever spot or code as a rule.
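To make the adaptability point concrete, here is a toy sketch of a spam classifier that is retrained when spammers change tactics. It is a tiny bag-of-words Naive Bayes written from scratch, not a production approach, and every training message is made up for illustration.

```python
import math
from collections import Counter


class TinyNaiveBayes:
    """Bag-of-words Naive Bayes with add-one smoothing, for illustration only."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, examples: list[tuple[str, str]]) -> None:
        """Add labeled (text, label) examples. Can be called again later."""
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(text.lower().split())

    def predict(self, text: str) -> str:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            # Log prior plus smoothed log likelihood of each word.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in text.lower().split():
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Made-up training data: the spam the model has seen so far.
INITIAL = [
    ("you have won a free prize", "spam"),
    ("claim your free prize now", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch on friday with the team", "ham"),
]
# Made-up examples of a new spam tactic, labeled later.
NEW_SPAM = [
    ("crypto wallet offer expires soon", "spam"),
    ("exclusive crypto offer for your wallet", "spam"),
]

message = "crypto wallet offer ends friday"
model = TinyNaiveBayes()
model.train(INITIAL)
before = model.predict(message)
model.train(NEW_SPAM)  # retrain on the new tactic
after = model.predict(message)
print(before, "->", after)  # ham -> spam
```

No rule was edited between the two predictions. The behavior changed only because the model saw new labeled examples, which is the property a hardcoded filter lacks.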

The New Frontier: LLMs and Agents

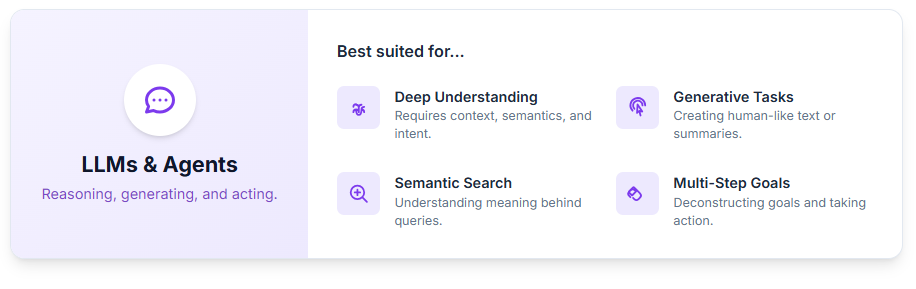

Large Language Models (LLMs) and agents built on top of them represent a major evolution in this paradigm. They don't just solve complex problems; they handle tasks that are fundamentally about reasoning, understanding, and generating human-like responses.

LLMs as Ultimate Pattern Recognizers

LLMs are a form of machine learning specifically designed for the most complex and nuanced data of all: unstructured language. You would use an LLM instead of rules or even traditional ML for tasks that rely on a deep understanding of context, semantics, and intent.

Summarizing a document: There are no simple rules for creating a good summary. It requires understanding the key topics, their relationships, and rephrasing them concisely.

Powering a chatbot: A rule-based chatbot is brittle and frustrating ("I'm sorry, I don't understand that."). An LLM-powered chatbot can handle a vast range of conversational topics and user intents.

Semantic search: Instead of just matching keywords (a rule), an LLM can understand the meaning behind a query. Searching for "movies about fighting a corrupt government" can return V for Vendetta, even if those exact words don't appear in the description.
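The mechanics behind semantic search can be sketched with cosine similarity over embedding vectors. In a real system those vectors would come from an embedding model; here they are hand-written placeholders on three invented axes, and the two non-Vendetta catalog entries are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Placeholder vectors. Illustrative axes: [political oppression, rebellion, romance]
CATALOG = {
    "V for Vendetta": [0.9, 0.8, 0.2],
    "Placeholder romantic comedy": [0.0, 0.1, 0.9],
    "Placeholder cooking documentary": [0.1, 0.0, 0.1],
}

# Stand-in for the embedding of "movies about fighting a corrupt government".
QUERY_VECTOR = [0.8, 0.9, 0.0]


def search(query_vector: list[float], catalog: dict[str, list[float]]) -> list[str]:
    """Return titles ordered from most to least similar to the query."""
    return sorted(
        catalog,
        key=lambda title: cosine(query_vector, catalog[title]),
        reverse=True,
    )


print(search(QUERY_VECTOR, CATALOG)[0])  # V for Vendetta
```

The query and the top result share no keywords. They match because their vectors point in a similar direction, which is what "understanding the meaning" amounts to at this level.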

Agents: Moving from Prediction to Action

Agents are systems that use LLMs as their "brain" to make decisions and take actions. They are the ultimate escape from a fixed set of rules. An agent can be given a high-level goal, and it will reason about the steps needed to achieve it.

For example, a rule-based system for booking travel might look like this:

IF destination is Paris AND hotel_rating > 4 AND price < 200 THEN suggest_booking.
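Written as Python, that rule is a single boolean expression. The function signature is an assumption; the thresholds come straight from the rule above.

```python
def suggest_booking(destination: str, hotel_rating: float, price: float) -> bool:
    """Return True only when all three fixed conditions hold."""
    return destination == "Paris" and hotel_rating > 4 and price < 200


print(suggest_booking("Paris", 4.5, 180))  # True
print(suggest_booking("Paris", 4.5, 220))  # False
```

It is precise, but it has no way to interpret a request like "somewhere with character".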

An agent, on the other hand, can handle a vague prompt like: "Plan a 3-day weekend trip to Paris for me next month. I like art museums and want to stay somewhere with character under €200 a night."

The agent would use its LLM core to:

Deconstruct the Goal: Identify key constraints (Paris, next month, art, character, <€200).

Formulate a Plan: Search for flights, browse hotels that match the description "character," find top art museums, check their opening hours, etc.

Execute Tools: Interact with APIs for airlines, hotels, and maps.

Synthesize and Respond: Present a coherent itinerary to the user.

In this scenario, writing rules to cover every possible request would be impractical. The agent's behavior is dynamic, goal-oriented, and emergent, making it a good fit for complex, multi-step problems that defy rigid logic.
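The four steps above can be sketched as a small loop. This is a skeleton under heavy assumptions: no real LLM or travel API is called, the `Goal` object stands in for what the LLM would extract from the prompt, `plan` stands in for the LLM's planning step, and both tools return placeholder data.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """Stand-in for the constraints an LLM would extract from the prompt."""
    city: str
    max_price_eur: int
    interests: list[str] = field(default_factory=list)


# Hypothetical tools. Real ones would call hotel and maps APIs.
def search_hotels(goal: Goal) -> list[dict]:
    hotels = [
        {"name": "Hotel A (placeholder)", "price_eur": 180},
        {"name": "Hotel B (placeholder)", "price_eur": 260},
    ]
    return [h for h in hotels if h["price_eur"] < goal.max_price_eur]


def find_attractions(goal: Goal) -> list[str]:
    return [f"Top {interest} (placeholder)" for interest in goal.interests]


TOOLS = {"search_hotels": search_hotels, "find_attractions": find_attractions}


def plan(goal: Goal) -> list[str]:
    """Stand-in for the LLM planning step: tool names to call, in order."""
    steps = ["search_hotels"]
    if goal.interests:
        steps.append("find_attractions")
    return steps


def run_agent(goal: Goal) -> dict:
    results = {}
    for step in plan(goal):                # formulate a plan
        results[step] = TOOLS[step](goal)  # execute tools
    # Synthesize and respond: merge tool outputs into one itinerary.
    return {
        "city": goal.city,
        "hotels": [h["name"] for h in results.get("search_hotels", [])],
        "attractions": results.get("find_attractions", []),
    }


print(run_agent(Goal(city="Paris", max_price_eur=200, interests=["art museums"])))
```

The structure is the useful part: the list of steps is produced at run time from the goal rather than fixed in advance, which is what separates an agent from a rule.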

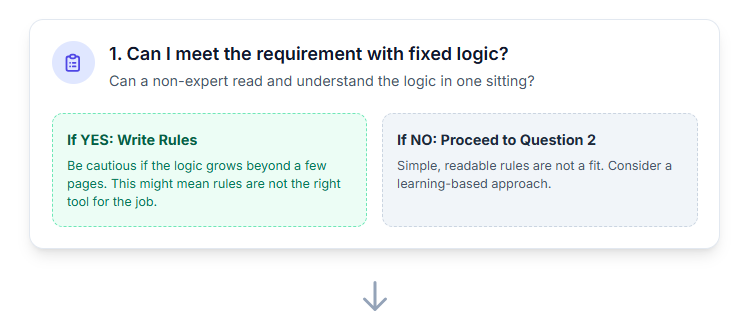

A simple decision framework

Before you choose, answer three questions in order.

Can I meet the requirement with fixed logic that a non-expert can read in one sitting?

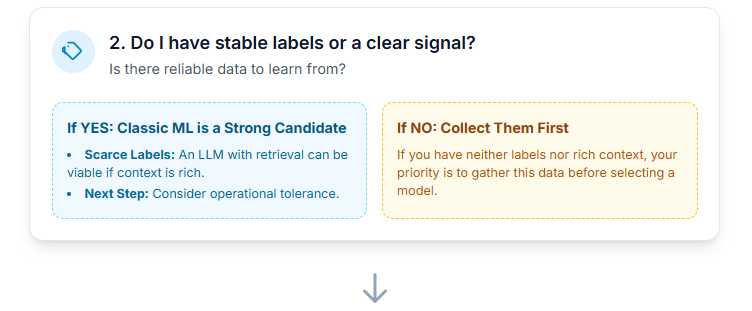

If yes, write rules. If the logic is longer than a few pages and you struggle to name each block neatly, you are probably forcing rules to do a job they are not suited for.

Do I have stable labels or a clear signal to learn from?

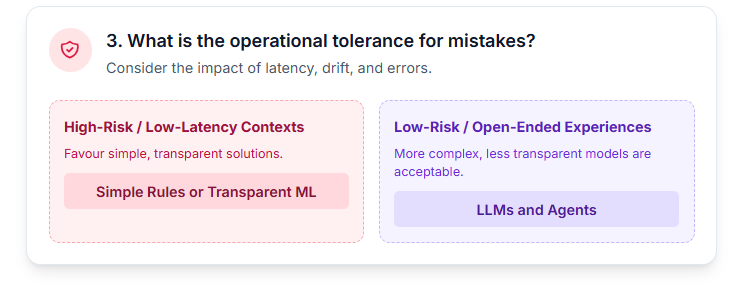

If yes, classic ML is a strong candidate. If labels are scarce but text and context are rich, an LLM with retrieval can be viable. If you have neither labels nor context, collect them first before choosing a model.

What is the operational tolerance for mistakes, latency, and drift?

High-risk and low-latency contexts favour simple rules or transparent ML. Open-ended, low-risk experiences can accept LLMs and agents.
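Fittingly, the framework itself is simple enough to write down as rules. The function below is one possible reading of the three questions. The parameter names are invented, and how the third question combines with the second (for example, gathering labels instead of shipping an LLM in a high-risk setting) is an assumption rather than something the framework spells out.

```python
def choose_approach(
    readable_fixed_logic: bool,
    stable_labels_or_signal: bool,
    rich_text_and_context: bool,
    high_risk_or_low_latency: bool,
) -> str:
    """Walk the three questions in order and return a suggested starting point."""
    # Question 1: can fixed, readable logic meet the requirement?
    if readable_fixed_logic:
        return "rules"
    # Question 2: is there something to learn from?
    if stable_labels_or_signal:
        # Question 3 narrows the choice within ML.
        return "transparent ML" if high_risk_or_low_latency else "classic ML"
    if rich_text_and_context:
        # Assumption: in high-risk settings, gather labels before relying on an LLM.
        if high_risk_or_low_latency:
            return "collect labels first"
        return "LLM with retrieval"
    return "collect labels or context first"


print(choose_approach(True, False, False, True))    # rules
print(choose_approach(False, True, False, False))   # classic ML
print(choose_approach(False, False, True, False))   # LLM with retrieval
```

Treat the output as a starting point for discussion, not a verdict. Real projects often land on a hybrid.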

📖 Book of the Week

“AI Agents in Practice” by Valentina Alto

Go beyond simple chatbots—this book is your guide to building production-ready AI agents that plan, reason, collaborate, and deliver real value.

What you’ll learn

Design and deploy single- and multi-agent systems with frameworks like LangChain and LangGraph

Build agents with memory, context, and tool integrations

Apply orchestration patterns and industry-specific case studies

Implement ethical safeguards and optimize for performance in production

Who it’s for

AI engineers & data scientists ready to move past prototypes

Developers & architects integrating agents into real systems

Product leaders & entrepreneurs exploring business applications of AI

Why read it

You’ll get hands-on tutorials, framework comparisons, and practical design patterns—everything you need to future-proof your AI development.

⚡️Power-Up Corner

The core decision framework tells you what to choose, but the real edge comes from knowing the traps to avoid, the hidden costs to plan for, and the hybrid patterns that actually work in practice. This section is a set of accelerators: lessons learned the hard way, checklists for readiness, and techniques that keep systems robust as they move from whiteboard to production. Treat it as a playbook of “power-ups” you can reach for when the basics aren’t enough.

Here’s what we’ll cover:

Hidden costs and common failure modes – how rules, ML, and LLMs break differently in the real world.

Hybrid patterns that work – pragmatic combinations that balance explainability, adaptability, and control.

Data readiness checklist – how to know when you’re truly prepared for ML or LLM adoption.

Evaluation that maps to reality – testing approaches that reflect production conditions, not lab toys.

Migration path – a sensible, staged evolution from rules to ML to agents.

Case study – anomaly detection in finance ops as a worked example.

Hidden costs and common failure modes
